AI-generated stories are stories written by humans, but watered-down and plagiarized. AI would not be able to write stories without ~~stealing~~ training from human-made stories.

Human stories are also plagiarized

Giving Shakespeare ye olde side eye

Some human stories are plagiarized.

Most human stories are similar to other stories without being plagiarized.

ALL AI stories are plagiarized.

Oh, so there are authors that never read a story before and just made it up in their heads?

There is no real difference between AI and humans in respect to borrowing elements from other stories.

AI stories may be lacking in some respects, but you can’t tell me human stories don’t recycle the same tropes

> There is no real difference between AI and humans in respect to borrowing elements from other stories.

I say this is a case of reductivism

There’s a large swathe of people who want comfort food entertainment—unchallenging and similar to what they’ve enjoyed watching/reading/listening to before—at least some of the time. It makes sense that LLMs would be good at filling that need, since they can pretty much only generate more of the same.

People say they prefer food cooked by professional chefs over fast food, yet new study suggests that’s not quite true

get a professional chef to make you fast food and you will understand why they are professionals

People say they prefer meaningful relationships with other humans more than buggering goats. A new study shows that might not be true.

Game of Thrones was the most popular series in the world, despite having multiple characters and parallel story arcs. People underestimate other people.

This is kind of stating the obvious. Humans can write shit stories too.

Feels like news headlines are just competing for maximum cognitive dissonance. I guess that’s how you make a profit these days.

Would be curious to read the LLM output.

I find after reading a selection of LLM generated poetry/short fiction, you start to notice signs of a generated text. It tends to be a bit too polished, without any idiosyncrasies and with almost too much consistency in the delivery.

EDIT: So I read the short story. To be honest, I don’t think I would be able to tell whether this was LLM generated or written by a writer. There are some subtle signs, but it’s very much possible that I am seeing these signs because I knew it was LLM generated.

One thing to point out is that this is not really a short story, it’s less than a thousand words with honestly not much going on and there is lots of colourful descriptive text. I was expecting something in the range of 3K to 5K words.

After reading the “short story”, I am not sure I agree with the methodology. Some of their test statements include “I was interested in the struggles of these characters” and “This story deserved to be published in a top literary journal”. The story is not long enough to make meaningful conclusions about such test statements.

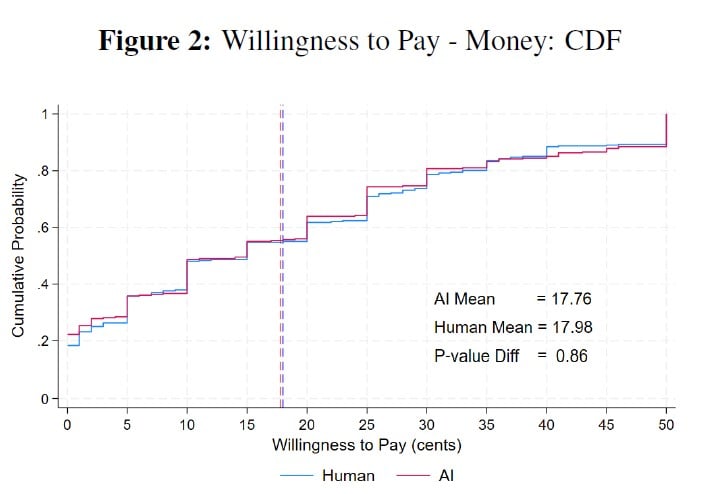

Their approach to willingness to pay also doesn’t make sense as it’s too short. Here is their graph for the TWP metic:

This seems artificial, no one is going to pay 30 cents in a real world scenario for such a text (irrespective of whether you think it was written by a person or if it was LLM generated). The respondents might rate it at being worth 30 or 40 cents as part of the survey, but that’s not the same thing as actually going through with a purchase. I will note they didn’t simply ask for a value and they did have a system tied to the survey payout; but this almost seems irrelevant.

I find that LLMs also tend to create very placative, kitschy content. Nuance is beyond them.

Well… my bad-hearted woman loved a smooth-talking gambler, so I… ran him over with my train. Lord! Lord! Yes I… ran him over with my train.

By Bender

A polished turd is still polished!

> Would be curious to read the LLM output.

It looks like it’s available in the linked study’s paper (near the end)

Cheers!

Reed Johnson is a Senior Lecturer in Russian, East European, and Eurasian Studies

Martin Abel is an assistant professor of economics

Reed makes sense but wtf does economics have to do with it?

Econometrics has basically taken over for statistics in a lot of social sciences, for some reason. You rarely see a social scientist team up with a statistician - they team up with an economist, and they apply econometrics to whatever it is they are studying.

There could be a couple of reasons. Economists might be perceived as having a better understanding of “the real world”, as they are used to building predictive models around real world societal affairs, which is not really the job description of a statistician. Alternatively, it could be because they themselves are social scientists more than mathematicians, and they therefore “speak the language” of social sciences and are capable of interdisciplinary co-operation.

I think it’s a problem. More social scientists should learn to think critically of their methods and to do their own empirical research.

The Freakonomics effect on a generation of academics

Economics involves understanding and predicting the behaviour of large groups of people, doesn’t seem all that far off topic here. And of course the way that people react to AI-generated content in products will be quite relevant to lots of people trying to market such products.