A balanced left-wing perspective on AI

Most casual views of AI fall into one of two opposite positions: that AI will surpass humans and take over the world (the “singularity”), or that it has no intelligence at all and is a scam (“talking parrots”). Neither is correct.

First, we will look at where intelligence comes from in both humans and AI, and then at what AI is currently capable of. After that, we will discuss the AI bubble and some common left-wing mistakes.

Intelligence and capabilities

Where does intelligence come from?

We are Marxists, and Marxism already gives us an answer about where intelligence and knowledge come from: they come from real-world practice and experience, and from using that experience to develop theories that explain the world.

See Mao’s “On Practice”:

Whoever wants to know a thing has no way of doing so except by coming into contact with it, that is, by living (practicing) in its environment.

If you want to know a certain thing or a certain class of things directly, you must personally participate in the practical struggle to change reality, to change that thing or class of things, for only thus can you come into contact with them as phenomena; only through personal participation in the practical struggle to change reality can you uncover the essence of that thing or class of things and comprehend them.

If you want knowledge, you must take part in the practice of changing reality. If you want to know the taste of a pear, you must change the pear by eating it yourself. If you want to know the structure and properties of the atom, you must make physical and chemical experiments to change the state of the atom. If you want to know the theory and methods of revolution, you must take part in revolution. All genuine knowledge originates in direct experience.

This idea goes by many names: the theory-practice cycle, the scientific method, the “iteration” talked about in agile software development, materialism and its capitalist equivalent “realism”, and so on.

Now, AI has access to human data, so it obviously does not need to learn everything through its own practice:

All genuine knowledge originates in direct experience. But one cannot have direct experience of everything; as a matter of fact, most of our knowledge comes from indirect experience, for example, all knowledge from past times and foreign lands.

But a human still had to practice to collect this information:

To our ancestors and to foreigners, such knowledge was–or is–a matter of direct experience, and this knowledge is reliable if in the course of their direct experience the requirement of “scientific abstraction”, spoken of by Lenin, was–or is–fulfilled and objective reality scientifically reflected, otherwise it is not reliable. Hence a man’s knowledge consists only of two parts, that which comes from direct experience and that which comes from indirect experience. Moreover, what is indirect experience for me is direct experience for other people. Consequently, considered as a whole, knowledge of any kind is inseparable from direct experience… That is why the “know-all” is ridiculous… There can be no knowledge apart from practice.

There are two main points here:

- Building knowledge requires interacting with the world, not just observing it. A simplified way of thinking about this is the difference between recognizing correlation and recognizing causation. AI’s current knowledge mostly comes from interactions performed by humans in the past (“indirect experience”).

- Because AI’s existing knowledge comes from past human experience, AI must collect its own experience in the real world to truly go beyond it.
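To make the correlation-versus-causation point concrete, here is a toy simulation in Python. It assumes a hypothetical world where a hidden cause `z` drives both `x` and `y`, while `x` has no effect on `y` at all. Someone who only watches sees a strong correlation between `x` and `y`; someone who sets `x` directly sees almost none. The variable names and coefficients are made up for illustration.

```python
import random

def correlation(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

def simulate(intervene, n=10_000, seed=0):
    """Hypothetical world: hidden cause z drives both x and y; x never affects y."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)                  # hidden common cause
        if intervene:
            x = rng.gauss(0, 1)              # experimenter sets x, independent of z
        else:
            x = z + rng.gauss(0, 0.5)        # passive observation: x follows z
        y = 2 * z + rng.gauss(0, 0.5)        # y depends only on z
        xs.append(x)
        ys.append(y)
    return correlation(xs, ys)

observed = simulate(intervene=False)
experimented = simulate(intervene=True)
print(f"only observing:   correlation(x, y) = {observed:.2f}")
print(f"intervening on x: correlation(x, y) = {experimented:.2f}")
```

Only the intervention reveals that `x` does not cause `y`; passive observation alone would suggest that it does.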

Now, some discussion and caveats.

This does not validate the “talking parrot” idea, where people claim that AIs are simply parrots with no understanding of what they say. On its own, it does not necessarily refute the parrot idea either, but if relying on indirect experience made something a parrot, then a human student in school would be a parrot as well, which is nonsense.

AI can also almost certainly identify new patterns or connections in existing data that humans did not notice or study thoroughly. This could allow it to reach, or go slightly beyond, human intelligence. Of course, not being able to interact with the world (apply changes to a system and observe the outcome) will make this difficult.

But once AI has “maximized” the knowledge (theory) it can extract from existing data (practice), it will need to collect more data itself in the real world. This calls the idea of an AI “singularity” into question.

Knowledge is not found by looking inward. The idea of a “know-all” AI that improves purely by iterating internally is invalid. If AI must experiment in the real world, then it will be limited by real-world laws. It cannot simply update its weights in a high-speed compute cluster: it must physically interact with the world. That requires robots, the components for those robots, materials, energy, and production.

Again, Mao:

Man’s knowledge depends mainly on his activity in material production, through which he comes gradually to understand the phenomena, the properties and the laws of nature, and the relations between himself and nature; and through his activity in production he also gradually comes to understand, in varying degrees, certain relations that exist between man and man. None of this knowledge can be acquired apart from activity in production.

Now, if existing human experience is already enough to go far beyond human intelligence, this limit will not make much difference: whether AI is super-intelligent or super-super-intelligent will not matter much. But my understanding of how theory and practice work makes me believe it will be difficult for AI to go very far on human data alone.

The idea of duplicate vs new information

I have not seen Marxists make this point, but I think it is worth considering: as more knowledge is collected, more and more of the information available in the world will simply duplicate what is already known. New information will therefore become harder and harder to find. Finding it could require more searching, and it could also require more advanced experimentation, i.e. more advanced technology and production.

Setting production aside, this implies that progress will be logarithmic rather than exponential. Interestingly, current LLM performance has been shown to grow roughly logarithmically with training compute, meaning each fixed improvement requires multiplying the compute. But in my opinion, it is a bit pseudoscientific to treat this as evidence, as architecture improvements and longer context lengths can also drive progress.
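A toy model of the duplicate-information idea, as a sketch under stated assumptions: suppose there are `num_facts` distinct facts, and each observation reveals fact *i* with probability proportional to 1/i (a Zipf-like distribution, which is an assumption, not a measurement). The Python function below computes, exactly rather than by random sampling, the expected number of distinct facts seen after *n* observations and the probability that the next observation is something new.

```python
def discovery_curve(sample_counts, num_facts=100_000, exponent=1.0):
    """Expected distinct facts seen, and chance the next observation is new.

    Assumption (hypothetical): fact i is observed with probability proportional
    to 1 / i**exponent, so common facts recur constantly and rare ones seldom.
    """
    weights = [1 / i ** exponent for i in range(1, num_facts + 1)]
    total = sum(weights)
    probs = [w / total for w in weights]
    rows = []
    for n in sample_counts:
        # A fact with probability p has been seen at least once with probability 1 - (1 - p)**n.
        unique = sum(1 - (1 - p) ** n for p in probs)
        # The next observation is new only if it hits a fact not yet seen.
        p_new = sum(p * (1 - p) ** n for p in probs)
        rows.append((n, unique, p_new))
    return rows

for n, unique, p_new in discovery_curve([10**3, 10**4, 10**5, 10**6, 10**7]):
    print(f"{n:>12,} observations: ~{unique:>9,.0f} distinct facts, "
          f"next one is new with probability {p_new:.3f}")
```

The chance that the next observation is new falls steadily as more is collected, so each additional unit of effort yields fewer new facts. This only illustrates diminishing returns; it does not show that real-world progress follows any particular curve.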

So that is where intelligence comes from. This is true regardless of whether we are talking about humans or AI.

Let’s talk about humans in particular.

Where does the vast majority of human intelligence come from?

Humans develop a basic understanding of the physical world around them within the first few years of life, and reach “adult”-level intelligence within 18-25 years.

From this, people incorrectly conclude that AI should be able to reach human intelligence from a single lifetime of human data. This is completely false because it fails to recognize where the vast majority of human intelligence comes from.

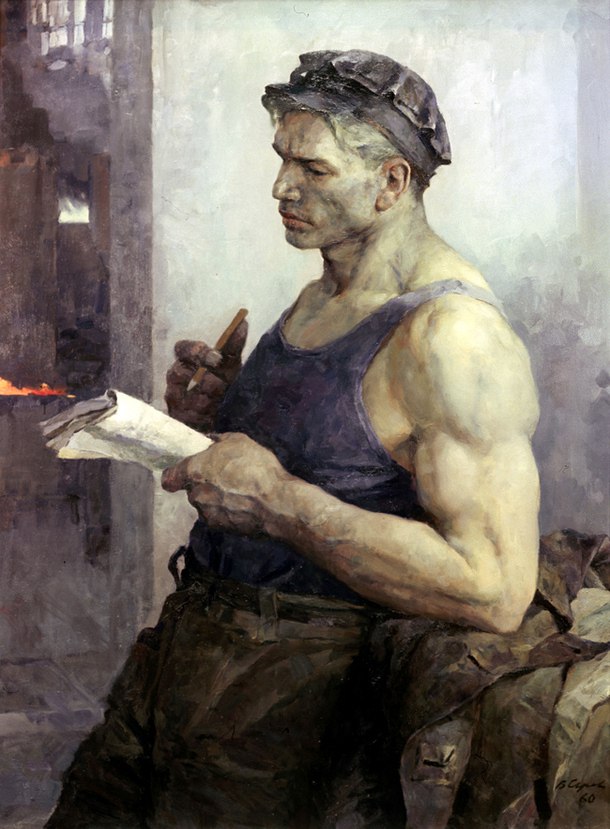

Evolution is the true source of human intelligence. An 18-year-old human has 18 years of lived experience and 1 billion years of evolutionary experience.

Evolution is a kind of genetic algorithm, and it is orders of magnitude more complex and advanced than any learning algorithm humans have developed. It includes all kinds of complex behaviors: genes turning on and off based on living conditions, self-improvement of the evolutionary learning algorithm itself, symbiotic relationships with foreign bacteria, recombination, and more.

The scale is dramatically different as well. This algorithm did not run in an imperfect and oversimplified simulation, in a single data center, for a few months. It ran in real life, on a planetary scale, for a billion years.
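For a sense of what a genetic algorithm is, here is a deliberately tiny one in Python: 30 bit-string “genomes”, selection that lets only the fitter half survive, recombination (crossover) between survivors, and random mutation. The fitness function (count the 1 bits, a standard toy problem called OneMax) and all the parameters are assumptions chosen for illustration. The only feedback passed to the next generation is which genomes survive, and the whole run is a few thousand fitness evaluations, not a planet’s worth of organisms over a billion years.

```python
import random

GENOME_LENGTH = 40
POPULATION_SIZE = 30
MUTATION_RATE = 1 / GENOME_LENGTH

def fitness(genome):
    """Toy stand-in for survival: count the 1 bits (the classic OneMax problem)."""
    return sum(genome)

def crossover(mother, father, rng):
    """Recombination: a prefix from one parent, the rest from the other."""
    cut = rng.randrange(1, GENOME_LENGTH)
    return mother[:cut] + father[cut:]

def mutate(genome, rng):
    """Each gene flips with a small probability."""
    return [1 - g if rng.random() < MUTATION_RATE else g for g in genome]

def evolve(generations=100, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(POPULATION_SIZE)]
    best_per_generation = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        # Selection: only the fitter half survives; nothing else about their "lives" is kept.
        survivors = population[: POPULATION_SIZE // 2]
        children = [
            mutate(crossover(rng.choice(survivors), rng.choice(survivors), rng), rng)
            for _ in range(POPULATION_SIZE - len(survivors))
        ]
        population = survivors + children
    return best_per_generation

history = evolve()
print("best fitness, first generation:", history[0])
print("best fitness, last generation: ", history[-1])
```

Because survivors are carried over unchanged, the best fitness in this sketch never decreases from one generation to the next.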

There are animals that are born knowing how to swim, follow their parents, hunt, and reproduce. This intelligence cannot be obtained in a few minutes or hours of learning; it comes from evolution.

In comparison, humans take years to even comprehend the world around them. But make no mistake: this is not evidence that humans are born without knowledge. The human brain’s architecture is far more advanced than any human-developed algorithm. It is not only advanced in general; it is specifically prepared to learn vision and language. It is no coincidence that these are the areas where most AI progress has been made: vision is how we perceive the world, and language is how we communicate what we perceive to others. Evolution designed the brain for these tasks and many others, and that is why the brain can learn so much in such a short time.

It is extremely naive to assume that we can simply bypass 1 billion years of evolution and easily surpass this level.

Tech workers have a habit of entering new domains and incorrectly assuming that their understanding is superior to that of the experts in those domains. This frequently causes tech projects to fail. In my opinion, that is what is happening in AI, but at an absurd scale. Although I am not religious, trying to create intelligence is effectively “playing god”. It is a bit delusional to think that this would be an easy task.

It does not make sense to compare human lifetime learning to pretraining. It is more accurate to compare it to finetuning, calibration, decompression, and transfer learning. Pretraining, i.e. starting from scratch, is more similar to evolution than to lifetime learning, so it is entirely predictable that it is a slow and tedious process.
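A toy illustration of why starting from a good initialization matters, in Python: plain gradient descent on a tiny linear regression problem, once from all-zero weights (“from scratch”) and once from weights that are already close to the answer, standing in for a hypothetical closely related pretrained task. The dataset, learning rate, and tolerance are made-up assumptions; the point is only that the second run needs fewer updates.

```python
# Hypothetical toy task: recover weights for y = 2*x1 - x2 + 0.5*x3.
TRUE_WEIGHTS = [2.0, -1.0, 0.5]
INPUTS = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0], [0, 1, 1], [1, 0, 1]]
TARGETS = [sum(w * x for w, x in zip(TRUE_WEIGHTS, row)) for row in INPUTS]

def distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

def steps_to_converge(start, lr=0.5, tol=1e-3, max_steps=10_000):
    """Full-batch gradient descent on half the mean squared error.

    Returns how many updates it takes to get within tol of the true weights.
    """
    w = list(start)
    n = len(INPUTS)
    for step in range(max_steps):
        if distance(w, TRUE_WEIGHTS) < tol:
            return step
        errors = [sum(wi * xi for wi, xi in zip(w, row)) - y
                  for row, y in zip(INPUTS, TARGETS)]
        grad = [sum(e * row[j] for e, row in zip(errors, INPUTS)) / n
                for j in range(len(w))]
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return max_steps

from_scratch = steps_to_converge([0.0, 0.0, 0.0])
# "Pretrained" start: weights from a hypothetical, closely related task.
from_pretrained = steps_to_converge([1.99, -0.99, 0.49])
print("steps from scratch:   ", from_scratch)
print("steps from pretrained:", from_pretrained)
```

Real finetuning is far messier than this, but the direction of the effect is the same: most of the work was already done before the final training run began.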

Five to ten years ago, it almost felt like I was the only person in the world who believed that evolution was the dominant source of intelligence. But this view is becoming more common. See this tweet from Andrej Karpathy:

Animal brains are nowhere near the blank slate they appear to be at birth. First, a lot of what is commonly attributed to “learning” is imo a lot more “maturation”. And second, even that which clearly is “learning” and not maturation is a lot more “finetuning” on top of something clearly powerful and preexisting. Example. A baby zebra is born and within a few dozen minutes it can run around the savannah and follow its mother. This is a highly complex sensory-motor task and there is no way in my mind that this is achieved from scratch, tabula rasa. The brains of animals and the billions of parameters within have a powerful initialization encoded in the ATCGs of their DNA, trained via the “outer loop” optimization in the course of evolution. If the baby zebra spasmed its muscles around at random as a reinforcement learning policy would have you do at initialization, it wouldn’t get very far at all. Similarly, our AIs now also have neural networks with billions of parameters… TLDR: Pretraining is our crappy evolution.

When people think about the “singularity”, they often imagine an instantaneous process. But we are already in the singularity: evolution was the first step, and human-made AI will be the second. The first step has been running for a billion years, and humans have existed for over 100k years. Even if the scaling of AI intelligence were truly exponential (which is not guaranteed), that would not make it instantaneous. The second step still has not happened.

There are definitely limitations to comparing evolution with AI:

- Although DNA has excellent storage density and stores an absurd amount of data, the majority of it is probably redundant.

- The majority of experiences are not taken advantage of by evolution. The vast majority of its progress comes from survival and reproduction, which ignores minor life experiences and focuses only on life and death. Only a small amount of progress comes from epigenetic and cultural memory.

- Simulations, while imperfect, are valuable, and most of their tiny details and imperfections do not matter for learning.

- Human-developed technology can do some things dramatically better than evolution, which is restricted to general biological approaches.

There is one more very significant limitation, which I will go into next.

Where does the intelligence in AI come from?

Evolution starts from scratch, and I said that pretraining is comparable to evolution. But that is a bit of a simplification, because human-developed AI does not actually need to completely start from scratch. Instead, it can continue from where evolution left off.

The more extreme and theoretical example would be trying to recreate or clone the human brain. But there are simpler methods that are already being done: learning from human labels (vision classification), and learning from human language (LLM pretraining).

Human unconscious knowledge is far more valuable than human conscious knowledge. Humans frequently complete tasks in ways they are unable to explain. For instance, we easily process visual information and identify objects, but we struggle to write non-learning-based computer vision algorithms ourselves. Human-labeled data, such as labeled images, can transfer this knowledge to AI even when we cannot comprehend it ourselves. However, this requires manual labeling, and each label is usually a binary or categorical classification: when we label an object in an image, we are not transferring all the information we know about that type of object, or anything close to it.
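To put a rough number on how little a single label carries: a label chosen from K categories can hold at most log2(K) bits of information, and it reaches that bound only when every category is equally likely. A short Python sketch of that bound (the function name is illustrative):

```python
import math

def max_bits_per_label(num_classes):
    """Upper bound on the information in one label drawn from num_classes categories.

    The bound is reached only if every class is equally likely; real label
    distributions are skewed, so the average label carries less.
    """
    return math.log2(num_classes)

for description, k in [("binary label (object / no object)", 2),
                       ("1,000-class label", 1000)]:
    print(f"{description}: at most {max_bits_per_label(k):.2f} bits")
```

Even a 1,000-class label, the size of the widely used ImageNet-1k label set, carries under 10 bits per image, far less than what a person actually knows about the object in it.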

Language is much more useful. First of all, it is a byproduct of human production and life. We constantly write and speak, and the internet records a lot of it. Second, language holds a huge amount of unconscious knowledge.

Consider the sentence “The leaves are orange”. Put simply, it tells us the color of a set of objects. But there is a lot of implied meaning there. What is a color? What is a leaf? What is a tree, a plant, an environment? What does it mean that the leaves are orange? What season is it? What season is next? What are seasons? What is the Earth, and where did it come from? Who lives on the Earth, and what do they know? It can go on forever. With enough examples, a large amount of human knowledge can be transferred.

Human language is effectively distilled data, i.e. data that provides a shortcut to reaching human-level intelligence. So while I said pretraining is similar to evolution or training from scratch, you could also describe it as being similar to training on embeddings or probabilities taken from the human brain.
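In machine learning, training a model on another model’s output probabilities instead of on hard labels is called knowledge distillation. A minimal Python sketch of why “soft” targets carry more than hard labels, using three hypothetical classes and made-up probabilities: a hard label only says “leaf”, while a soft target also says “somewhat like a flower, not at all like a car”.

```python
import math

def cross_entropy(target_probs, predicted_probs):
    """Cross-entropy H(target, predicted) in nats; zero-probability targets are skipped."""
    return -sum(t * math.log(p) for t, p in zip(target_probs, predicted_probs) if t > 0)

# Hypothetical classes: "leaf", "flower", "car".
hard_label = [1.0, 0.0, 0.0]        # a human label only says "leaf"
teacher = [0.70, 0.25, 0.05]        # hypothetical soft target: leaf, flower-like, not a car

overconfident_student = [0.98, 0.01, 0.01]
similarity_aware_student = [0.70, 0.25, 0.05]

for name, student in [("overconfident", overconfident_student),
                      ("similarity-aware", similarity_aware_student)]:
    print(f"{name:>16}: hard-label loss {cross_entropy(hard_label, student):.3f}, "
          f"soft-target loss {cross_entropy(teacher, student):.3f}")
```

The hard label rewards the overconfident student, while the soft target rewards the student that learned how the classes relate to each other. Treating language as soft targets from the human brain is an analogy, not a literal description of how LLM pretraining works.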

How much information does human language hold?

Past Marxists, specifically Joseph Stalin, give us an answer:

Language is one of those social phenomena which operate throughout the existence of a society. It arises and develops with the rise and development of a society. It dies when the society dies. Apart from society there is no language. Accordingly, language and its laws of development can be understood only if studied in inseparable connection with the history of society, with the history of the people to whom the language under study belongs, and who are its creators and repositories.

Language is a medium, an instrument with the help of which people communicate with one another, exchange thoughts and understand each other. Being directly connected with thinking, language registers and fixes in words, and in words combined into sentences, the results of the process of thinking and achievements of man’s cognitive activity, and thus makes possible the exchange of thoughts in human society.

To put it directly: language holds almost all of it, conscious and unconscious. I previously said that unconscious human knowledge is much larger than conscious human knowledge, and that humans are unable to comprehend their unconscious knowledge. But from the perspective of learning algorithms, humans can consciously create datasets that hold their unconscious knowledge, and those datasets can be learned from. That is what language is.

If you ask an AI what color a zebra is, it will say black and white. You may think “it does not know what black and white are; it cannot see; it has just memorized the answer”. But does it really matter whether it can see the color in reality? In RGB terms, it knows that white is the maximum value for all three channels (255, 255, 255) and that black is the minimum value for all three (0, 0, 0). It knows that white light is a combination of many visible wavelengths, and it even knows what those wavelengths are numerically. It knows that black is the absence of light as well. It does have knowledge about color, even if it does not have all knowledge about color. Just as we cannot see X-rays but can comprehend them and transform them into formats that we can see (visible-light images), AI can do the same.

Is someone who is blind from birth completely unable to understand what color is? Is it impossible for this person to reach human levels of intelligence because they lack vision capabilities? It is an invalid line of thinking.

(Admittedly, a person blind from birth still has vision-related knowledge from evolution, but it was never decompressed or calibrated. The idea is still absurd.)

This is sometimes called symbolic knowledge, and it is considered a sign of intelligence. Ironically, many people today treat it as a sign of the opposite.

How intelligent are today’s AIs?

I like to describe today’s AI as 99% memorization, 1% intelligence. Note that it is mostly memorization rather than intelligence, and note that it is not 0% intelligence. There is some intelligence. It would not be able to form coherent sentences, solve open-ended problems with any degree of success, or do much of anything beyond a single task if it did not have some intelligence.

At the same time, this level of intelligence is probably still below that of a dog or cat. People see it solve software engineering problems or speak in coherent sentences, and assume that this would be impossible at such a low level of intelligence. What is going on here is that its intelligence is targeted toward useful areas. A dog or cat has a much better understanding of intuitive physics and of how to do its job as an animal. This knowledge is primarily unconscious for humans, and many animals have it, so we take it for granted.

Again, there is symbolic or indirect knowledge, similar to humans’ conscious knowledge of physics: the AI could absolutely use physics calculations and mathematics to predict how an object will move, with accuracy similar to that of human or animal intuition.

Vision capabilities

Today’s state-of-the-art AIs are capable of comprehending an image, including what objects are in it and what is happening. Their vision is comparable to looking at a low-resolution image, i.e. they struggle to see small details. Regardless, they can see and comprehend.

General problem-solving and agentic capabilities

Generally, complex problems are solved by breaking them down into multiple smaller and simpler problems and solving them step by step. This means that each step requires less intelligence. Although they are terrible at it and quite dependent on human supervision, today’s AIs are capable of breaking problems down step by step like this.

Outside of disabilities, raw human brainpower does not vary significantly from person to person. Although it may not seem like it on the surface, the difference between an expert and a less experienced person in completing an expert-level task is often simply time. It may be a very large amount of time, but the task can be done.

Theoretically, an AI can work for a very long time, breaking a problem down into smaller and smaller pieces until it completes the task. Because it can think (output words) very quickly, it does not matter that it needs far more thought than a human. This is the motivation behind AI agents and “agentic” AI: by allowing AI to solve problems step by step, and to interact with testing environments to check its work, it should be able to solve human-level problems.
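A toy divide-and-conquer sketch in Python of the “break it down until it is easy” idea. The hypothetical `weak_sort` solver can only handle problems of four items or fewer, yet splitting the problem, solving the small pieces, combining the results (essentially merge sort), and then checking the final answer solves a problem of 1,000 items. This illustrates decomposition and verification only; it is not a description of how real AI agents work.

```python
import random
from collections import Counter

MAX_SIZE = 4  # the largest problem the weak solver can handle directly

def weak_sort(items):
    """Hypothetical weak solver: it only copes with tiny problems."""
    if len(items) > MAX_SIZE:
        raise ValueError("problem too large for the weak solver")
    return sorted(items)

def merge(left, right):
    """Combine two sorted lists: a simple step that needs no insight."""
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    return merged + left[i:] + right[j:]

def solve(items, calls):
    """Split the problem until each piece fits the weak solver, then combine."""
    if len(items) <= MAX_SIZE:
        calls[0] += 1
        return weak_sort(items)
    mid = len(items) // 2
    return merge(solve(items[:mid], calls), solve(items[mid:], calls))

def check(result, original):
    """The 'testing environment': verify the answer instead of trusting it."""
    in_order = all(a <= b for a, b in zip(result, result[1:]))
    return in_order and Counter(result) == Counter(original)

rng = random.Random(0)
data = [rng.randint(0, 999) for _ in range(1000)]
calls = [0]
result = solve(data, calls)
print("correct:", check(result, data), "| weak-solver calls:", calls[0])
```

The weak solver is called hundreds of times: the task takes far more total “thought” than a single call could handle, but each individual step stays within the solver’s limits.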

In practice, however, there is a major limitation with today’s AI that should be considered the #1 priority for AI researchers: context length.

Context Length

Context length is the maximum length of a conversation, measured in tokens, that an AI can handle.

Technically, many of today’s AIs support context lengths of hundreds of thousands of tokens, and some support 1 million or more. The limit may be set by the provider or forced by the hardware or algorithms.

But in reality, intelligence collapses much sooner than that. By 10k tokens there is a significant drop in intelligence, and by 100k+ tokens the AI can easily become incoherent in an agentic task. For example, it may make nonsensical decisions, misremember past events and actions, repeat the same set of actions over and over, or focus on a random unimportant part of the problem for an excessively long time.

10k tokens (roughly 7,500 English words) is not a lot. Many software engineering problems take more than that just to describe (the contents of the relevant code files), so the limit is reached even without agentic behavior.

Remember my claim that hard problems can be solved with lower intelligence and experience given a large amount of time? Today’s AIs do not have that time.

This is partially an architectural limitation. The transformers used in today’s state-of-the-art AIs generate each new token by attending to every previous token in the conversation. In other words, the cost of generating each token grows linearly with conversation length, so the total cost of a conversation grows quadratically. This is not ideal at all.
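A pure-Python sketch of single-head causal attention that counts its own query-key dot products. Real implementations use batched matrix multiplications, multiple heads, and key-value caching (which avoids recomputing past keys and values but still has every new token attend to all earlier ones), so this only models how the work scales, not how any particular model is implemented.

```python
import math

def causal_attention(queries, keys, values):
    """Single-head causal scaled dot-product attention in pure Python.

    Token t attends to tokens 0..t. Returns the outputs and the number of
    query-key dot products computed.
    """
    d = len(queries[0])
    outputs, dot_products = [], 0
    for t, q in enumerate(queries):
        scores = []
        for k in keys[: t + 1]:                  # this token and every earlier one
            scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
            dot_products += 1
        top = max(scores)                        # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values[: t + 1])) / total
                        for j in range(len(values[0]))])
    return outputs, dot_products

def total_dot_products(n):
    """Closed form of the count above: 1 + 2 + ... + n."""
    return n * (n + 1) // 2

tokens = [[math.sin(i + j) for j in range(4)] for i in range(8)]  # 8 toy token vectors
outputs, count = causal_attention(tokens, tokens, tokens)
print(count, total_dot_products(8))  # both are 36

for n in (10_000, 100_000, 1_000_000):
    print(f"{n:>9,} tokens -> {total_dot_products(n):.2e} dot products")
```

Ten times more context means roughly a hundred times more attention work over the whole conversation, which is one reason long contexts are expensive.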

There are two possibilities:

- We can improve context length without moving on from the transformer architecture.

- We must move on from the transformer architecture.

The first possibility might mean that real agentic AIs are only a few years away. The second possibility might mean it will take 10+ years. But it is hard to predict.

What will be the effects of a real agentic AI?

A lot of the hype around AI is based on the belief that human or superhuman AI is not far off, and that this would cause dramatic and destabilizing changes to society by automating all work, including physical labor via robotics. A lot of the doom around AI is based on the belief that AI will not reach human level, will not be able to do the vast majority of human work, and will therefore be near-worthless. It is always these two extremes: human level or junk. This is invalid.

I personally think that human-level AI is very unlikely to happen, because beating evolution so easily seems very unrealistic. But that does not mean AI cannot cause dramatic changes to society.

Why? Because the vast majority of human jobs do not require human-level intelligence. Take manual labor, for example. Manual labor typically involves moving items from one place to another, searching for items, moving around an environment, etc. These tasks were very hard for earlier algorithms because those algorithms could not comprehend the world or follow natural-language instructions. This meant that they had to be manually trained or programmed for each task, which is infeasible and not a good approach. But today’s LLMs are capable of perceiving things and following natural-language instructions. Their performance is definitely weak, but they can already do those tasks to some degree. Combined with the automation of part of white-collar labor, this could mean that a majority or near majority of jobs could be automated.

This can happen without significant improvements over current intelligence. Context length is currently more important than intelligence, and so are speed and cost. If the models degrade after only a few seconds of operation, they will not be suitable. Similarly, if they have multiple seconds of latency and cost $5+ an hour, they will not be suitable. But they can already perceive the world and break a problem down into steps. If context length can be fixed, then we will not be far off from this capability, even without big increases in intelligence. At that point, AI robotics will be possible.

Conclusion

Even though super-intelligence is quite unlikely to happen any time soon, AI is still not far off from causing dramatic changes to society.

The American AI bubble

So far, this text has been very optimistic about the value of AI compared to typical left-wing views. AI does have some intelligence and will soon have a large amount of real-world automation value.

This does not mean that AI is not a bubble in America. Even if AI turns out to be worth hundreds of billions of dollars to the economy (which is almost guaranteed), American investors have invested more than this, and have done so in a very wasteful way.

There are two issues with American AI investment:

- Real-life physical input costs are a major limitation to the effects of AI.

- American AI investment is extremely wasteful. This waste manifests as both a premature investment in rapidly depreciating technology and a damaging diversion of essential resources, like energy and skilled labor, from other economic sectors.

Physical input costs: physical robots, natural resources, and the price influence of AI

Robots

Without a robot to control, AI simply cannot do robotics.

This report from 2014 claims that software is 40-60% of the cost of a robot. This implies that even if AI brought that software cost down to nearly zero, the total cost of a robot would fall by at most 60%.

Note that these numbers are probably for larger-scale tasks. For smaller-scale tasks, since the software cost is normally a fixed cost, AI will probably cause a much bigger cost reduction. Also, this source is from 2014, which is not ideal, but I do not have a better source at the moment.

Still, this is nothing like a 99% reduction for large-scale robotics. Even if robots could be purchased in much higher quantities without a significant change in prices (extremely unlikely; even a few years ago, robot components were being bought out multiple years in advance), the price reduction would be less than an order of magnitude. This is not the ultimate destabilizing change that US markets are expecting.
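The arithmetic behind the 60% ceiling, as a small Python sketch with the same shape as Amdahl’s law: if software is a share `s` of a robot’s cost and AI removes a fraction `r` of that software cost, the remaining cost is 1 - s*r of the original. The function name is hypothetical, and the sketch assumes hardware and all other costs stay unchanged.

```python
def robot_cost_factor(software_share, software_cost_reduction):
    """Fraction of the original robot cost that remains.

    software_share: share of the robot's cost that is software (40-60% per the 2014 report).
    software_cost_reduction: share of that software cost AI removes (1.0 = free software).
    Assumes hardware and all other costs stay unchanged.
    """
    return 1 - software_share * software_cost_reduction

for share in (0.4, 0.6):
    remaining = robot_cost_factor(share, 1.0)
    print(f"software share {share:.0%}: cost falls to {remaining:.0%} of today's, "
          f"i.e. {1 / remaining:.2f}x cheaper, even if software were free")
```

Even with free software, a 60% software share leaves a robot only 2.5x cheaper, well short of an order of magnitude.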

Physical inputs

Both physical products and physical robots require natural resources, which have costs that cannot simply be automated away. Identifying what share of products’ final costs comes from natural resources is beyond the scope of this text, but it is significant.

Delayed reactions

Even if AI is capable of automating a large amount of work, the actual implementation and the response in pricing will likely take decades to appear. This means that short-term speed improvements are insignificant in the long run.

Waste

GPU degradation

It is well understood that datacenter GPUs typically last only a few years. This means that aggressively buying GPUs today will only provide a few years of capability.

Computational costs and Moore’s Law

Moore’s Law, at least as applied to CPU performance, is considered to be dead or nearly dead due to physical laws and limitations. That is not necessarily the case for GPUs. GPUs perform operations in parallel on a large number of cores, with the compromise that those cores are much slower than CPU cores. As a result, GPU performance can keep improving through both faster cores and more of them. So far, GPUs have continuously improved in price-performance, power efficiency, and raw compute.

I am not trying to prove here that Moore’s Law itself is relevant, but that its effects are. In the past, when CPUs were still improving dramatically, it was commonly understood by engineers and businesses that if current CPUs did not meet your requirements, you could simply wait a few months to a few years, and your requirements would be met. This meant that efficiency improvements were often unnecessary.

Nvidia continues to release better GPUs every year. Unless you believe in super-intelligence, it does not make sense to rush to build AI immediately. Simply waiting a few years may bring a huge cost reduction.

This is true for both training and inference. Even if AI becomes capable of robotics, it is still very costly to run, so it will not be ready for production use. Buying compute early adds a huge premium, and every day that context length is not solved means a portion of that premium is wasted.
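A sketch of the waiting argument in Python. The yearly improvement in compute per dollar (1.4x) and the 4-year hardware lifespan are hypothetical placeholders, not measured figures; substitute real numbers before drawing conclusions. The first function shows how the price of a fixed amount of compute falls with waiting; the second shows what fraction of a GPU’s service life is burned while it waits for a use case (such as solved context length) that is not ready yet.

```python
def cost_of_fixed_compute(wait_years, cost_today=1.0, yearly_improvement=1.4):
    """Price of a fixed amount of compute if bought after wait_years.

    yearly_improvement is a HYPOTHETICAL factor by which compute per dollar
    improves each year; it is a placeholder, not a measured figure.
    """
    return cost_today / yearly_improvement ** wait_years

def service_life_lost(idle_years, lifespan_years=4.0):
    """Fraction of a GPU's (hypothetical) service life spent waiting for a usable application."""
    return min(idle_years / lifespan_years, 1.0)

for years in range(5):
    print(f"buy after {years} years: {cost_of_fixed_compute(years):.2f}x today's price")
print(f"idle for 1 of 4 years: {service_life_lost(1):.0%} of the hardware's life wasted")
```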

Inference and non-AI pricing effects

AI has caused significant increases in the price of GPU compute, as well as general compute. This has affected areas such as gaming, traditional computer vision, general cloud compute costs, etc. It has also affected inference costs. AI inference would likely be significantly cheaper if GPUs were not used as much for training.

Electricity costs

Electricity costs are exploding in the US due to AI.

GPU power efficiency

GPU power efficiency improves over time, meaning that electricity costs would be lower if the current GPU usage increases were delayed by some time.

The time required to increase electricity supply

Power generation is a very intensive, industrial process. It requires traditional power plants, nuclear power plants, green energy sources such as solar, etc., all of which take years to build.

This is particularly true for nuclear power, the most efficient and viable source of power in the long term. A nuclear power plant may take a decade to finish, and construction cannot necessarily be sped up.

Pricing effects on non-AI areas

Almost all production in the world relies on electricity. AI is aggressively increasing electricity costs, which will have dramatic inflationary effects on almost all other products. It will also cause price increases for utilities.

Applications

American companies consistently attempt to perform tasks that are beyond the capabilities of current AI, usually in the direction of complete automation instead of productivity increases or human supervision.

- Focusing on agentic approaches when current models do not have sufficient context length.

- Focusing on creating AI art from scratch, such as generating images, videos, and even entire movies from text, instead of creating more controllable tools that can be directed by actual artists, such as style transfer, or tools and plugins for existing artist software like Photoshop, Blender, and CGI tools.

- Prematurely focusing on humanoid robots as if AI is ready to replace any human in any task.

Although this is primarily due to delusional beliefs about AI capabilities in the near future, it is incorrect to ignore the right-wing and reactionary sentiments here, especially when so many grifters from cryptocurrency and other areas moved straight over to AI as the bubble progressed.

In general, capitalists and the right wing very frequently fantasize about not only weakening but destroying the working class entirely, while ignoring that labor is the true source of value creation. OpenAI fantasizes about a $20k/month AI PhD worker, where they will magically eliminate the worker while keeping most of the wages as profit, without any competition hurting their margins. AI researchers and grifters online frequently theorize about a post-AI world where no jobs are available and anyone without sufficient capital is basically doomed. They look at this not with fear but with excitement, and eagerly help build this future without worrying about the economic consequences, likely because they think they are not part of the vulnerable population.

For art, this is particularly visible. The “culture war” delusions of the right wing are out of the scope of this text, but I will go into them briefly. The right wing frequently claims that art is left-wing because of corporate endorsement, rather than because of capitalism or consumer interests. They believe that corporations go against the “silent majority” (in reality, an extremely vocal minority) not for profit but for nefarious reasons (“white genocide”, feminism, the destruction of western civilization, general antisemitic conspiracy theories). In reality, although left-wing views are very common among their customers, companies such as Disney frequently discourage left-wing themes in their entertainment, against the will of their left-wing workers.

Art is inherently a creative process that requires out-of-the-box thinking. The “blue-haired artist” is not just a made-up stereotype; left-wing people are inherently more likely to have the creativity required. The right wing does not like this whatsoever, and constantly fantasizes about eliminating the industry entirely and making art possible without creativity, which is something of a contradiction.

Summary

American AI investment ignores the potentially exponential price reductions in GPUs that will happen over time, and ignores the linear price reductions/supply increases in electricity that will happen over time.

Probably due to the American economy’s extreme focus on finance and software (read: monopolization and rent extraction), American AI investment also ignores the physical limitations of automation, which cannot simply be improved exponentially the way that hardware can.

American AI investment assumes that human or super-human intelligence will make up for all of this, while ignoring:

- the fact that evolution took 1 billion years on a planetary scale to reach the same outcome.

- the physical limitations of automation.

- the fact that knowledge comes from real-world experience, meaning that it is also dependent on physical output, material conditions, and production in general.

It is biased by:

- The past decades of financialization and software-monopolization in the US economy.

- Right wing fantasies about completely disempowering workers.

Comparing China and the USA

China has been continuously building electrical capacity over time, probably already has the electricity needed for AI, and will have more electricity in the future.

China is building real competitors for Nvidia and TSMC, which avoids monopoly and geopolitical interference, and takes advantage of the benefits of competition.

China is focusing on practical applications of AI, knowing that it will naturally improve over time as computation costs reduce. This allows electricity, skilled human labor, and compute to be used in areas that are more relevant today.

China is building the majority of the robots in the world, and is continuing to expand on this. When AI is truly capable of robotics, the robots will be available for this.

Overall, China is taking a much more logical approach, thanks to its resistance to the disorderly expansion of capital and general opposition to software monopolization.

Left-wing mistakes and miscellaneous info

There are a few other topics I wanted to discuss that would dilute the message of the previous sections, so I will go into detail about them here. They are common leftist mistakes I’ve seen online, although some are not specific to leftists.

Stochastic parrots

Leftists frequently think that AI is a parrot that has zero intelligence. As discussed previously, it does have intelligence, and that intelligence is aligned with human work, so it is more visible and useful than it would be in an animal.

Complaints about water usage, electricity usage, and general costs of AI

AI in the US is certainly a bubble. But it is incorrect to think that AI should not be used at all, or that it is a significant factor in climate change.

Water usage is insignificant, and is misunderstood because of the small amount of water directly used in daily life. While humans may use less than 100 gallons of water a day directly, the indirect costs from industry and agriculture are orders of magnitude more. Both the water and electricity costs of AI are insignificant compared to the value that can come from inference. Furthermore, the water usage metrics that are commonly shared include cooling, where the water is used to cool the data center and then released back to the source. This can have ecological effects, but is overall insignificant compared to water usage in agriculture and industry, where the water is used up or contaminated.

If America had a rational approach to AI similar to China, these costs would be insignificant.

Aggressive endorsement of intellectual property and anti-automation views

Although Marxists should care about workers, they should not side with intellectual property or anti-automation. If AI were used properly, it would increase the productivity of artists, which under capitalism would mean increased unemployment and reduced wages. But Marxists should not be against automation; they should be against capitalism, and against the right-wing distortion of AI, which causes bad economic planning by irrationally focusing on eliminating labor immediately rather than working with existing labor to improve productivity.

“Evil” AI

There are many theoretical ideas about superintelligent AIs “misunderstanding” human requests. For example, a superintelligent AI is told to minimize world hunger, and it does this by killing all humans, which ensures that zero humans can ever be hungry.

In my opinion, this is a bit clueless. How can a superintelligent AI “misunderstand” a request? Even a human would know to read between the lines and understand the implication: that we want to improve food supply and distribution rather than kill hungry humans, and that we are trying to help humans, not harm them. Human language contains human knowledge and cannot be separated from it. Superintelligence and misunderstanding are contradictory.

Again, let’s see what Stalin says about human language (from “Marxism and Problems of Linguistics”):

Accordingly, language and its laws of development can be understood only if studied in inseparable connection with the history of society, with the history of the people to whom the language under study belongs, and who are its creators and repositories.

In other words, understanding humanity is required for understanding language. Since AI today is primarily trained on language, and language is by far the best source of data because it is distilled human knowledge, it is extremely likely that a superintelligent AI would act like an extremely intelligent human.

Of course, humans can still be evil, but the danger would be significantly reduced in this case, especially in scenarios where the AI misunderstands a human instruction or perspective. Also, most evil in humans comes from things that could be considered mental issues or disorders, such as sociopathy, fear, a lack of empathy, etc. Overall, the owners of a human-level AI would be far more dangerous than the AI itself.

Memorization and hallucinations

A lot of the misunderstanding about hallucinations comes from a misunderstanding of how AI is built. AI is trained to predict the next word during pretraining, and to solve tasks during alignment and post-training. Often there is no obvious answer, and the data may only contain an answer (of varying quality), not the best answer. Basically, it is trained to guess as well as it can. If it were not able to do this, or were aggressively discouraged from doing so, it would struggle to train or be useful, because it would refuse much of the time. Both for training and for usage, it is more useful for the AI to guess, and the AI is not intelligent enough to know when to guess and when not to. This is why hallucinations are so prevalent.
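To make the guess-versus-refuse trade-off concrete, here is a deliberately tiny sketch in Python. It is not how real language models work internally (they use neural networks over tokens, not word counts); a hypothetical bigram counter stands in for next-word prediction. The `min_confidence` parameter is an illustrative assumption: at 0 the model always guesses when it has seen the word before, and raising it makes the model abstain whenever its best guess is weakly supported.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows which in a toy corpus (a stand-in for pretraining)."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def predict_next(follows, word, min_confidence=0.0):
    """Return (best next word, its share of observed continuations).

    With min_confidence=0.0 the model always guesses if it has seen `word`;
    with a higher threshold it abstains (returns None) when evidence is weak.
    """
    counts = follows.get(word.lower())
    if not counts:
        return None, 0.0
    guess, n = counts.most_common(1)[0]
    confidence = n / sum(counts.values())
    if confidence < min_confidence:
        return None, confidence
    return guess, confidence


corpus = "the cat sat on the mat the dog sat on the rug the cat ran"
model = train_bigrams(corpus)
print(predict_next(model, "the"))                      # ('cat', 0.4)
print(predict_next(model, "the", min_confidence=0.5))  # (None, 0.4)
print(predict_next(model, "sat"))                      # ('on', 1.0)
```

After “the”, the best available answer in this toy is only a 40% guess; a model required to abstain below 50% would return nothing at all, which mirrors the point above that heavily discouraging guessing makes a model refuse much of the time.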

Also, as discussed previously, understanding language requires understanding humans, and an AI that purely worked off memorization would struggle to form coherent sentences at all. Even if it does not have human intelligence, it still has some intelligence, and the hallucinations stick out to us because we do not realize the huge amount of intelligence required to answer at all.

Economic effects of AI

Human-level intelligence is unlikely. What is more likely in the near future is the elimination of a significant portion of white-collar and manual labor, meaning >40% of jobs, but not 100% of jobs. In other words, it is effectively automation, and traditional Marxist economics already mostly explains its economic effects.

People fail to recognize that major automation and productivity improvements have already happened many times over. The invention of agriculture 10,000 years ago is what made society possible, as not all humans had to hunt and gather food to survive. In other words, even 10,000 years ago humanity was dealing with the automation of a significant portion of society’s labor. This was accelerated with capitalism and the industrial revolution, where productivity significantly and rapidly increased.

Before capitalism, there were obvious reasons for people to struggle to some degree. After capitalism and the industrial revolution, which massively increased productivity, why do humans still struggle? Because capitalism extracts as much wealth as possible. Why does it not extract even more? Because labor still produces all wealth, and the human population buys all products, of which the vast majority are workers. In other words, workers are both the supply and the primary (99%) demand for capitalism.

If capitalists do not provide their workers the means to survive, they will not have labor. If capitalists do not provide their workers the means to purchase their products, they will not have customers. Although capitalists like to fantasize about workers being powerlessly run over without resistance, this is not the case. When workers are pushed to the limit, they either have “nothing to lose but their chains” and fight back, or inflation and deflation, either natural or due to a central bank, will cause a correction. To put it simply, when you think “40% of labor will be automated”, you should think “tendency of the rate of profit to fall” and “traditional Marxist economics”.

Another mistake is thinking that this will happen very quickly. That has not been the case historically, whether it be the industrial revolution, steam engines, semiconductors, robotics, the internet, etc. Companies frequently take decades to implement automation, companies frequently face minimal competitive pressure, supply chains cannot significantly drop prices until nearly the entire chain is automated, and of course, physical and material requirements exist (robots, equipment, etc.) and are the most significant limiting factor.

Economic effects of human-level AI

What about human-level AI? Marxism is uniquely suited to understanding this. Whereas most capitalist economic theories have a material interest in hiding the source of profit and value, Marxism does not. Marxism says it loud and clear: all created value comes from labor. A fully-automated competitive market with zero labor costs will tend to move towards zero prices outside of natural resources and input costs.

Humans can do physical and mental labor. Robots can already replace physical labor, but cannot replace intelligence. If AI replaces human intelligence, labor will be automated and will automatically scale with natural resource inputs (many of which, such as electricity, are effectively infinite in the short term), and the concept of “value” will break down (of course, capitalism is not competitive in reality). This is still not that different, as the goal of society is to serve humans. So either the welfare state placates humans and capitalist aggression for profits (monopolization, financialization) is contained, or there is revolution. Human-level AI should technically be able to beat socialist revolution, but capitalist governments are never rational, so I personally think it is unlikely. I also don’t believe that human-level AI is likely in the first place, so I will not go into it further.

Using idealism when discussing AI (Conclusion)

AI is discussed in an idealist, almost mystical way, by everyone, whether it be AI researchers or leftists. As you can probably tell, the overall goal of this text is to counter this tendency and highlight the relevance of materialism and traditional Marxism in this area, and hopefully increase their usage in the future.

Because the vast majority of human jobs do not require human intelligence. For example, manual labor. Manual labor typically involves moving items from one place to another, searching for items, moving around an environment, etc.

This cannot be true if

Evolution is the true source of human intelligence. An 18-year-old human has 18 years of human experience, and 1 billion years of evolutionary experience.

Within those 1 billion years of evolutionary experience came the experience of interacting with objects in the most primitive way, which is also part of human intelligence.

I agree with the general idea of AI being unable to be “intelligent” the way we humans are “intelligent”, but I think it is more relevant to say that this is because intelligence is not a real metric. Through experience we can gain multiple skills that allow us to interact with the world around us, but we cannot really turn all of those skills into a single metric (and where that has been tried, it only served to measure one’s familiarity with the testing method/language/culturally valued skills).

Knowledge is not found inward. The idea of a “know-all” AI that purely iterates internally is invalid.

This is true, I just wanted to highlight it because it reminds me of how (neo)rationalists think; they happen to be the biggest supporters of AI.

Within those 1 billion years of evolutionary experience came the experience of interacting with objects in the most primitive way, which is also part of human intelligence.

Human intelligence goes far beyond this. Almost all animals are capable of this behavior to some degree, with some going as far as using tools.

There are also a significant number of animal species that have an even stronger intuitive knowledge of this than humans. For example, a monkey knows how to swing through trees at a rapid pace. And yet, we know that humans are still more intelligent. This is an example of alignment: these animals are missing a broad range of human knowledge, but have a large amount of knowledge within certain areas (intuitive physics, body coordination).

A monkey has great intuitive physics and body coordination knowledge, but it cannot speak English, closely follow instructions, or write code. Does this mean that AI is smarter than a monkey? No. AIs are able to do these things because they are more closely aligned with the skills we need and the skills we trained them for. This alignment is what makes them usable at all when they contain so little intelligence.

I agree with this:

we cannot really turn all of those skills into a single metric

I’m not certain philosophically, but I think the existence of a single intelligence metric would inherently mean “know-all”, at least in the way it’s typically thought of. The “single metric” doesn’t exist in reality. Our estimates of this “single metric” are not actually a single metric, but a combination of multiple specific skill metrics: skills that we consider to be valuable in our society (e.g. social skills), and skills that we consider to be “innate to” or “expected of” the human brain (e.g. working memory). Regardless, they are not “general”, and that is why I prefer to use “human” or “super-human” instead of “general” (as do a lot of people discussing AI). This is more accurate to our true intended meaning: an intelligence that contains all human intelligence, and can therefore do anything a human can do (labor).

This is true, I just wanted to highlight it because it reminds me of how (neo)rationalists think; they happen to be the biggest supporters of AI.

Yup, unfortunately for them, they do not have Marxism 😁. Marxism’s understanding of the fact that obtaining knowledge requires doing practice makes it uniquely suited for understanding AI.

(I accidentally deleted my comment so here it is again)

https://redsails.org/artisanal-intelligence/

https://lemmygrad.ml/post/9538048/7200534

(I would also consider:

- using spoiler tags

- as a writing exercise consider cutting down the whole article to one third; it will help with comprehension for the reader to follow your journey and also for one to formulate the concepts one wants to relay more concisely and precisely)

You can undelete a comment by clicking the button again btw

Also yes I’m also a yapper lol, and I spend time trimming down my essays after I write them out. It takes time, and I haven’t really had good results with AI doing it for me (it wants to edit my voice too), but I try to keep them to the essentials, and if people have questions they will ask

Didn’t realise that, thanks for the tip!

I have found that forcing myself to re-write what I do more concisely allows me to re-examine what I want to say and re-understand what I am saying; almost a dialectical approach to writing, where seeing the material words hones my ideas, which in turn shapes what I write.

🙏🔥

🫡

Lol we’ve tried having constructive conversations around AI here for a while. We’ve long since said it was a tool just like any other being developed under capitalism and that capitalism is the problem, and acknowledged the current issues surrounding it, and yet you still have people going “lol it sucks” with no nuance

I’m honestly over it. I’ve just given up talking about it at all LMAO. I’ll just learn about it in silence and be wowed by China’s development as usual.

Offloading cognition to a machine is a problem that goes far beyond the capitalist system. A fact evidenced by dozens of studies from across the globe all showing a terrifying decrease in linguistic skill, communication skills, memory, problem solving abilities, and general cognitive atrophy.

Using AI to optimize a spreadsheet? Good use of optimization.

Using AI for research, “summarizing” texts, or as a google search? Uh oh.

There have been studies about the effects of search engines on memory before, which I believe might be the closest thing to what we are seeing again today. (DOI 10.1126/science.1207745)

How relevant will these changes to our society really be? We cannot fall into believing in the concept of the “degeneration” of society; you are not going to destroy people’s brains in a decade, or even a hundred years, of using a computer tool (!! not to say anything of actual material changes such as pandemics or pollution !!). This is not to say it will have no effect at all, but rather that the effects will be temporary, and will become yet another facet of capitalist society that will disappear with our transition to communism.

A fact evidenced by dozens of studies from across the globe all showing a terrifying decrease in linguistic skill, communication skills, memory, problem solving abilities, and general cognitive atrophy.

I wonder if the 34343rd covid variant, declining mental health, austerity in education, and erosion of the social fabric are more to blame for this than an LLM.

Sorry, I should have specified that the studies were also directly pertaining to AI usage.

👍🏿

“Because the vast majority of human jobs do not require human intelligence. For example, manual labor. Manual labor typically involves moving items from one place to another, searching for items, moving around an environment, etc.”

Uhhh what? are you sure an AI didn’t write this…

Also reads like someone who hasn’t done a day of manual labor in their life lmao. Gonna tell my buddies from my job at a steel factory that in fact they do not need to be intelligent at all.


💀

reads like something written by a conservative

like… no? manual labor does in fact require thinking - i should know, i’ve been doing manual labor my whole life

I will admit that my 40% claims are probably overblown. “Manual labor” categorizations probably do not only include the main path in well-defined roles; they probably also include specialized roles, roles that are less defined, and they also probably include some trades, even accidentally.

But there are a large number of jobs in logistics that are quite literally moving items from one location to another. For sortation, this is literal boxes (packages). For picking in a warehouse, it is individual items with varying shapes, sizes, strengths, etc., so there is more intelligence involved, and without a generalized AI solution, a more complex grasping algorithm is required. Are there some specialized roles, such as problem-solving, cleaning, etc? Yes, but the vast majority of workers are not doing those roles, and are expected to hand off those tasks to others to avoid distractions. For example, informing the managers of a spill, informing problem-solvers of missing labels or items, etc.

Instead of having an AI solve all of these problems, it can delegate the same way, meaning that it only needs to identify a spill, missing label, etc., rather than fixing it. When the AI fails to delegate, a worker monitoring the AI can eventually correct the situation.

We are both using anecdotal evidence, me of logistics work at places similar to Amazon, UPS, etc., and you at what potentially could be a much more skilled role. I will give more information about my own anecdotal evidence.

Here is an example of packages being moved between pallets and a conveyor belt. I never worked at a facility big enough to use these, but they are absolutely used in real life.

Here is a pretty well-known example of robots at Amazon handling the “movement” that I was talking about: the associate no longer needs to walk around the warehouse, and can simply focus on the picking (which is the more difficult “grasping” problem that the robots are not good at).

Notice that in both of those examples, the work is done entirely without large language models. This does not require high intelligence. And think about it: if we can already do this without the AI that is being discussed, is that a positive view of this AI? No. Really, my post is extremely pessimistic. I pointed out that the true limiter is the robots themselves, which is visible from the fact that we already have ways of doing this job without AI, but are still not doing it. It does not matter if we have AI when the robot itself costs $200k.

Especially when combined with software engineering and other white collar work, this means that AI could be very valuable, but will not turn the world on its head. It is simply another form of automation, and while it is a big jump, it is not too different from previous jumps. Aside from bad timing and misallocation, that is a big reason why AI investment in America is a bubble.

I also want to point out this:

manual labor does in fact require thinking

It requires far more thinking than any of our artificial intelligence, and requires far more alignment than animals in almost all cases (except for some cases such as farm animals, and these monkeys lol). But does it truly require human-level intelligence? The same human intelligence that discovers quantum physics, goes to outer-space, builds the internet and computers? No. The reason we consider it to be a human task is because there is nothing beneath humans that is suitable. Human intelligence, while it has some benefits, is completely overkill for a lot of these roles. At the same time, AI and algorithmic intelligence is way too low for the role, so we are forced to use humans anyway.

Even if you or others personally prefer this work, and it brings challenge and requires learning new things, it is still overkill for the role. And if you work at a more skilled role, then maybe AI would not be suitable, but it could be suitable in many other areas.



Out here asking the real questions.

Honestly, going off of the sheer size, the very neatly ordered sections with bold headers, various hyperlinks, and random lists… I think you know the answer to that question.

Edit: AI bros losing their minds whenever someone says anything about AI that isn’t worshiping the corporate machine will never not be funny. Actual five year old levels of cognition.

…is it really so hard to believe that somebody who made a long post would put the effort into making links and paragraphing properly? It isn’t rational to just assume that every AI opinion you disagree with is made by AI. It’s just bad faith skepticism.

edit: downvote away lol going “iS tHIs AI!?!? 😂😂😂😂😂” on every single post that’s genuinely just trying to talk about AI rationally doesn’t get any funnier the more you do it.

It’s not bad faith skepticism if that is quite literally how AI typically formats its long-form answers.

You know what, now that I notice it, who puts a “Summary” section in the middle of a post before continuing on as if nothing happened?

Just noticed another “conclusion” section. OP couldn’t even be bothered to hide where the tokens stopped generating.

why does it matter? Also OP is here, in the comments, interacting with people. So I guess it’s achieved sentience.

A pro-AI person that can’t even bother to write their own pro-AI defense is a little funny.

But, genuinely asking, why do you think it would matter?

Also OP is here, in the comments, interacting with people. So I guess it’s achieved sentience.

Ok? And? Did I say that OP was a machine, or that OP most likely used AI to generate the text? OP can use AI for the main body of the post and then write a few sentences on their own in the comments. I’m confused as to what your argument is.

I don’t think it does, that’s why I asked you.

Why should I bother to read or argue against a text that a writer couldn’t be bothered to write themselves?

The rapid abandonment of reasoning and even basic critical thinking in favour of letting a corporate machine do those for you is both horrifying and depressing.

tbh that also can just mean the person knows markdown pretty well and cares about presentation

What answer do you think is more likely?

probably handwritten tbh, not enough em dashes and “not x but y”-ing, and from a skim-read it looks like the quotes are actually relevant to the points being made

Em dashes were mainly a GPT issue, and most models have moved to using parentheses, which are heavy in this text. The “not x but y” was explicitly coded out with GPT4 too.

Does having “summary” and “conclusion” sections in the text not seem at all interesting? The sections aren’t even coherent, they’re just random AI blurbs, that then jump to a completely unrelated topic.

Sources for many of the quotes aren’t cited at all either.


It seemed more like a rhetorical question tbh. I thought you were either joking or insulting me, so I didn’t answer. You probably are, but I’ll give you an answer anyway.

AI is nearly useless with Marxism. It is only able to provide basic definitions and sometimes very narrow applications in well-understood contexts, usually contexts that were discussed by famous Marxists in the training data. If it wasn’t useless, I’d use it to filter recent Marxist texts to find high quality ones, I’d translate Chinese texts to English so that I could read them, etc., but I do not trust it to reliably do this.

Anyway, because of that, for writing I never really use it beyond proofreading/verification; I’ve probably only taken text from it (like individual sentences and rewordings) like less than 5 times ever for Marxism. Any Marxism it outputs is just a bunch of cargo-cult dramatic prose that doesn’t really say anything in the end. In the case of this text, it repeatedly says that a weakness of the text is the overdependence on “practice”, meaning that it doesn’t truly understand Mao’s On Practice, the theory-practice cycle, or dialectical materialism in general. And it won’t dare say anything positive about China in most cases lol. That tiny comparison of America and China makes it say that the text is idolizing China.

The wild people in the replies are complaining about the fact that there are multiple conclusions and sections. I’d call them delusional (and honestly I think it flows clearly if you actually read it) but there are actual reasons for this besides neurodivergence. It was originally in different formats:

- It started off as a Q&A-style format where I corrected common leftist mistakes relating to AI, similar to the 3rd miscellaneous section. It was supposed to be a short post, but got very long, so I ended up discussing the majority of it in an intelligence section and the economics section, basically making it a real text. The remaining stuff was either unrelated, or would have diluted those sections, so I kept the remaining stuff in a 3rd miscellaneous section, and summarized the whole text with the same original goal: bring materialism into discussions of AI.

- The intelligence section was originally two parts, with the second part being about AI technical capabilities. This section was way too detailed and technical, explaining each capability: vision, speed, price, short-term memory vs long-term memory vs working memory and how they compare to humans for each one, etc.

- The American bubble part was originally a part of this text meant to be combined with the parts about venture capitalism, monopoly, and reserve currency, but was excluded due to being too detailed and too irrelevant. I also meant to discuss it in another propaganda text about the international reserve currency and US collapse but I abandoned that text entirely.

Basically it was massively shortened, and was not a single post originally.

Since it was a combination of a bunch of different stuff that was reorganized multiple times, I did use AI to find where the flow “breaks” (sudden jumps, cut-off sentences from copy pasting, etc). But as I said, AI is awful at actually writing about Marxism. Although I tried to get assistance from it, it did not suggest any of those rewrite strategies I mentioned, because it doesn’t comprehend how the topics are related.

But afterward, I asked for help with the thesis. It was successful there, so there is 1 sentence in the text that actually was modified from AI. I will show you the process for transparency.

Here is the original:

So far, this text has been very optimistic about the value of AI compared to typical left-wing views. AI does have some intelligence and will soon have a large amount of real-world automation value. This does not mean that AI is not a bubble in America. Even if AI will be worth hundreds of billions of dollars to the economy (which I think is almost guaranteed), American investors have invested more than this, and have done so in a very wasteful way. There is a major limitation to the value of AI: real-life physical input costs. American AI investment is also extremely wasteful because it invests too early and because it aggressively redirects resources from other industries.

I tried to get AI to improve on this, and it gave me this:

While the previous sections established AI’s significant practical potential, this value must not be confused with its current financial valuation in the United States. The American approach to AI is a classic speculative bubble, characterized by investment that far exceeds realistic economic returns. This bubble is driven by two core fallacies: a disregard for the hard physical limitations of automation—such as input costs and resource availability—and a profoundly wasteful strategy of premature investment that aggressively redirects capital and energy from other vital sectors of the economy.

THIS is how AI writes. It sees “Marxism” and starts writing super dramatically. Was it mostly logically correct? Yes, but that is because I provided it an almost-finished version of the text from the post, and it didn’t need to add anything (I wanted it to write thesis-like statements for the transition to the next chapter). If I hadn’t provided it the whole text, it would obviously have made something much worse, because its comprehension is worse than its writing abilities. This is one of the best outputs I’ve gotten from AI for Marxism, and it’s still mediocre, even if it managed not to say anything incorrect.

But the last sentence there is salvageable. So I took it and made this:

This waste manifests as both a premature investment in rapidly depreciating technology and a damaging diversion of essential resources, like energy and skilled labor, from other economic sectors.

As you can see, I shortened the sentence a bit and removed the surrounding and preceding parts, but kept much of its wording. So to answer your question, that sentence’s wording was significantly assisted by AI. The rest was not.

As for your concerns:

I think it’s fair to expect an author to have spent at least as much time writing as they expect a reader to spend reading.

I started recognizing that AI was able to generalize faster before GPT-3 came out, probably 7 years ago or so. When combined with strong synthetic data strategies, large pretrained vision networks were capable of learning to recognize a new image class from only a few examples (it used to take hundreds or thousands). Later on, similarity learning methods (for example, triplet loss) were capable of identifying objects from a single example without additional training, although with mediocre accuracy. This shows that when pretrained on a large amount of general data, AI models are able to generalize more quickly to narrower datasets, and at much higher quality than if they had been trained on that dataset alone.
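For readers unfamiliar with similarity learning, here is a minimal sketch of the idea, assuming the embeddings have already been produced by some pretrained network. The vectors are made-up stand-ins, the function names are hypothetical (not from any library), and the margin of 0.2 is just an illustrative value. Triplet loss trains the network so that an anchor is closer to a positive (same object) than to a negative (different object) by at least a margin; once trained, a new object can be identified from a single reference example by nearest-neighbor lookup, with no further training.

```python
from typing import Dict, Sequence

# Hypothetical embedding type: in practice these come from a pretrained network.
Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two embedding vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor: Vector, positive: Vector, negative: Vector,
                 margin: float = 0.2) -> float:
    """Hinge-style triplet loss: zero once the positive is closer to the
    anchor than the negative by at least `margin` (illustrative value)."""
    return max(0.0, squared_distance(anchor, positive)
               - squared_distance(anchor, negative) + margin)


def one_shot_identify(query: Vector, gallery: Dict[str, Vector]) -> str:
    """Return the label whose single reference embedding is nearest to the query.
    Adding a new class only means adding one embedding to `gallery`; no retraining."""
    return min(gallery, key=lambda label: squared_distance(query, gallery[label]))


if __name__ == "__main__":
    # Made-up 3-d embeddings standing in for real network outputs.
    gallery = {"mug": [1.0, 0.0, 0.0], "stapler": [0.0, 1.0, 0.0]}
    print(one_shot_identify([0.9, 0.1, 0.0], gallery))  # mug
    print(triplet_loss([1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 1.0, 0.0]))  # 0.0
```

The "mediocre accuracy" mentioned above comes from the embedding quality: the lookup itself is trivial, so everything depends on how well pretraining placed similar objects near each other.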

I’ll spare you the details, but I started comparing evolution to machine learning once I saw GPT-3. Back then I was much more extreme about it, thinking that it would take at least hundreds of years for AI to catch up. I thought that the only way to “bypass” evolution would be to train on brain scan or MRI-like data, or something similar (basically cloning the brain, or something close to it). In reality, human language is effectively distilled data (embeddings) of human knowledge, so it is very effective for bypassing evolution, at least partially.

Last year, I was looking at AI coding approaches and realized that the traditional conversation format is not ideal. It is effectively a whiteboard interview: the model cannot use IDE features such as syntax checking, cannot run tests, and so on. I started building an AI agent, thinking that if I gave the AI the ability to run tests and experiment the way a human would, it would perform much better: instead of a human pointing out the mistakes, the AI could find them itself, which would dramatically improve the final result. But performance was awful. I realized that AI quality drops dramatically once the context length exceeds about 10k tokens. This matches other people’s experience online.

I started portions of this text late last year (for the other texts I mentioned) and began this specific text a few months ago. I abandoned it and came back to it repeatedly, and finally finished it when Karpathy started talking about the AI bubble a few weeks ago, since his arguments (about evolution) matched mine.

So overall, this post came out of roughly 7 years of observing and working with AI, took a few months to make, and probably took around 100 hours of actual writing.

To be honest, the complete unwillingness to read is nothing new for leftists. People say “it’s so long, it has to be AI” and “it’s badly written, it has to be AI”. Like, are y’all actually Marxists? LOL. The group of people known for writing an absolute fuckton about everything and getting into detailed arguments about minor events from 100 years ago? Hello?

Refusing to read is nothing new. Now people blame AI, saying they can’t tell whether what they are reading is just slop, since slop is so easily made. But in my experience, it is usually possible to tell whether something is AI-generated within the first 10 seconds of reading it, regardless of length, ESPECIALLY if you know the subject area. It really seems like the same old cope I saw in online discussions 5+ years ago. Then again, I work in AI labeling, so I am probably better at detecting it than most.

Overall, this comment section makes me wonder whether AI is better at Marxism than most human Marxists. Years ago, I noticed that only about 5% of self-described Marxists actually understood dialectical materialism; the rest just believed in it blindly. I see that hasn’t changed.


First, I must submit that I am prejudiced against AI, LLMs, and anything under that umbrella.

Next, I will admit that I did not read your entire post, as I found it very difficult to follow. I can say with confidence that it was not written by AI, though, as an LLM would have made it a little more coherent.

I did however read the sections I thought most relevant.

Now let me say, I do not care if AI is intelligent. I do not care if AI is bad for the environment. I do not care that AI has the potential to replace “jobs”. I do not care if AI is evil or not.

But what makes me care, and what seems to be missing from your post, is the point.

Let’s say the world’s AI is ultra-intelligent, costs 1 watt to run, and is subserviently friendly. And while we’re at it, why not say that the world has passed universal basic income and the lack of jobs is no longer a concern. What’s the point?

What are we freeing up so much time for? Unfettered consumption?

You speak of evolution and make some interesting points and comparisons regarding it. Did we not evolve in the face of struggle? What of our species if we take struggle away? Are we to become the people depicted in WALL-E?

AI will destroy critical thought and interpersonal relationships, like the internet destroyed the community and the aircraft destroyed the family.

These facets of technology may serve to benefit the masses under communism as any tool might. But only if they are completely reconceptualized.

This community is low quality, to say the least, so I am not really interested in continuing to discuss this topic. I will try to keep this short.

What’s the point?

Automation and the increase of productivity. That is the point. That is the point of the industrial revolution, that is the point of the steam engine, that is the point of electricity, of motors and robotics, of semiconductors and computers, of the internet. Primitivism and idealism are reactionary. If AI is used in a harmful, non-productive way, it is an incorrect usage. If AI is used in a helpful way, it is a correct usage. If AI is used in a productive but harmful way, such as making workers unemployed, the problem is the capitalist economic system, not AI, and socialism is the solution.

What of our species if we take struggle away?

To put it bluntly, this is fascist sentiment. The goal of production and progress is to meet people’s needs and make their lives better. We do not exist to struggle; we struggle to build a world where struggle will not be necessary.

AI will destroy critical thought and interpersonal relationships, like the internet destroyed the community and the aircraft destroyed the family.

Is this not GenZedong? Am I in some ancom debate subreddit? I genuinely think I might be losing my grip on reality LOOOL

Do not worry about reading this text, read Lenin and Stalin instead.

Low quality community? I think people were pretty nice to you, considering your insulting rhetoric toward blue-collar workers and your rambling nonsense. You are delusional.

I did not insult blue collar workers. I said that human intelligence is overkill for manual labor, and is only used due to a lack of an alternative.

You claim that the position you oppose is “rambling nonsense”, and yet you are unwilling or unable to make a legitimate critique. Instead of investigating or staying silent, you and others knowingly straw-manned my position and distorted it, intending to portray me as a conservative who thinks that workers who do manual labor are unintelligent. Why? Because you have a tribalist position on AI and felt the need to attack an opponent who was not aligned with you, and you prioritized this urge over the correctness of yourself, me, and the other participants. This is not “pretty nice” behavior, this is liberal behavior, and as a communist, I am expected to call it out and attack it.

To indulge in personal attacks, pick quarrels, vent personal spite or seek revenge instead of entering into an argument and struggling against incorrect views for the sake of unity or progress or getting the work done properly. This is a fifth type.

To be aware of one’s own mistakes and yet make no attempt to correct them, taking a liberal attitude towards oneself. This is an eleventh type.

Lol, like I said, you are delusional and sound like you’ve never worked a manual job in your life. No one cares what you have to say if you keep defending your ridiculous phrases and cannot muster even a grain of self-criticism. Read the room.

All I see is “liberalism”, “strawman”, “personal attack”, “tribalism”, and “hypocrisy” written all over the walls repeatedly, is this correct?

To be aware of one’s own mistakes and yet make no attempt to correct them, taking a liberal attitude towards oneself. This is an eleventh type.

Do not say the phrase “self criticism”, you have no right to do so.

lol

Glad to hear that I am both fascist and ancom adjacent in my views, truly groundbreaking times we are in.

Using AI in a non-harmful, productive capacity precludes it from being actually intelligent, lest we let it cause us to waste away.

Pointing out what has been studied and well documented (the negative effects of the internet and similar technologies) is not primitivism.

One thing that has been pointed out in my industry is the automation-driven degradation of indispensable skills. Things have gotten so bad that the FAA sent out a bulletin begging pilots to hand-fly aircraft so that they can maintain their aeronautical aptitude.

A solution you might present, and one that is often presented, is more automation, even pilotless commercial aircraft. The problem is that these systems, much like LLMs, love to hallucinate. Autopilot loves to attempt suicide on commercial aircraft. (Commercial aircraft are subject to insane hours and duty cycles, exposing them to much more wear than the drones we’re used to seeing.)

I bring this all up as a real-world comparison for what I expect we will all experience when each of us is given our own mental autopilot: the degradation of mental skills.

I am not aware of the exact link you see between my question regarding our species and fascism, but I’ll concede that it could have been phrased better.

Struggle is in all facets of life, not just what’s physical and aesthetic (which I imagine is what fascists are most concerned with).

I’m not making a point about “survival of the fittest” or something along those lines when I speak of struggle. I’m speaking of struggle in managing interpersonal relationships, the source of most frustration for many. And I would say that it’s valid to be concerned about struggle like that being replaced in our lives by two AIs talking to each other, when we already have people using LLMs to solve their interpersonal conflicts for them. That is another skill that will degrade without struggle.

We already have an overabundance of production in the world, those bereft of the things they need are in that position because in our overindulgence we have decided to take from them as well.

Wanting to restrict AI, given its purported trajectory in capability, is more akin to wanting denuclearization than to advocating the cessation of all things post-industrial.

Let me ask anyone reading this one question: is the theory you consume the backbone of actual, substantive practice, as the authors intended, or is consuming theory just a hobby and discussing it a way for you to feel part of a community?

Regarding the harm that may come from automation, there are two positions (for and against) you could take on real automation:

- Automation is useful, and good, but we should also prepare for the negative consequences, and try to mitigate them.

- Automation has significant negative consequences that exceed the benefits, and is therefore harmful.

If the position is “While AI is definitely useful, we should be wary of slop effects, such as harm to the education system, and the harm that AI spam could cause to the internet”, then that is acceptable.

If the position is “AI needs to be stopped, is bad because it stops struggle (???), and does more harm than good”, then that is a toxic, Luddite position. How can it be fascist? By appealing to tradition, by appealing to nature, and by appealing to capitalist, puritanical, and Protestant work ethics.

The overarching goal of my text was stated at the end: to bring materialism, realism, and logic back into the left-wing discussion of AI. Could I mention the negative effects of AI, such as slop? Yes. But the left is already quite aware of those positions. It is NOT aware, even slightly, of how to view AI as automation; this comment section is proof. There were some discussions in my post about right-wing and capitalist delusions, such as the singularity and near-future human-level intelligence, but these were there to bound the acceptable range of positions: people should not believe in some magical singularity, nor should they think that AI is completely and utterly useless, as many people on the left genuinely, truly think. I also discussed some of the capitalist and right-wing influences on the direction of AI: how hatred of artists and workers causes capitalists to try to “skip ahead” beyond what is possible with current technology, such as by trying to create entire pictures and movies from scratch (from natural language instructions), with no artist involved.