DuckDNS has high enough latency (over 2000 ms) that Google Assistant can’t connect. I moved to FreeDDNS and my Home Assistant issues went away.

Silksong might be one of the “easiest” ones if I ever did a RenoVK. Basically, you check the swapchain size, and any 8-bit texture the game creates at that resolution gets upgraded to 16-bit. Done. That alone will stop the SDR layers from banding. (We actually use 16-bit float because we want brightness above SDR levels, but 16-bit UNORM would be a perfect, less problematic banding fix.)
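A minimal sketch of that swapchain-matching heuristic, written as plain TypeScript data logic rather than an actual Vulkan layer. The format strings mirror real Vulkan enum names, but the `ImageDesc` type, the list of formats treated as 8-bit color, and `upgradeIfSwapchainSized` are hypothetical illustrations, not RenoDX code.

```typescript
// Hypothetical model of an image-creation request (a real layer would
// read these fields from VkImageCreateInfo inside a vkCreateImage hook).
type Format =
  | "VK_FORMAT_R8G8B8A8_UNORM"
  | "VK_FORMAT_B8G8R8A8_UNORM"
  | "VK_FORMAT_R16G16B16A16_SFLOAT"
  | "VK_FORMAT_D32_SFLOAT";

interface ImageDesc {
  width: number;
  height: number;
  format: Format;
}

interface Extent {
  width: number;
  height: number;
}

// Assumed set of 8-bit color formats worth upgrading.
const EIGHT_BIT_COLOR: ReadonlySet<Format> = new Set<Format>([
  "VK_FORMAT_R8G8B8A8_UNORM",
  "VK_FORMAT_B8G8R8A8_UNORM",
]);

// 16-bit float keeps values above 1.0 available for HDR.
const UPGRADED: Format = "VK_FORMAT_R16G16B16A16_SFLOAT";

// Returns a copy of `desc`, upgraded if it is an 8-bit color image at
// exactly the swapchain resolution; everything else passes through.
function upgradeIfSwapchainSized(desc: ImageDesc, swapchain: Extent): ImageDesc {
  const sameSize =
    desc.width === swapchain.width && desc.height === swapchain.height;
  if (sameSize && EIGHT_BIT_COLOR.has(desc.format)) {
    return { ...desc, format: UPGRADED };
  }
  return desc;
}

// Usage: a full-resolution 8-bit target is upgraded; a smaller one is not.
const swapchain: Extent = { width: 1920, height: 1080 };
const sceneTarget = upgradeIfSwapchainSized(
  { width: 1920, height: 1080, format: "VK_FORMAT_R8G8B8A8_UNORM" },
  swapchain,
);
const uiAtlas = upgradeIfSwapchainSized(
  { width: 512, height: 512, format: "VK_FORMAT_R8G8B8A8_UNORM" },
  swapchain,
);
console.log(sceneTarget.format, uiAtlas.format);
```

In a real layer, anything else that names the image’s format (image views in particular) would likely need the same substitution, since Vulkan requires view formats to match or be compatible with the image.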

I might look at vkBasalt. That’s basically how ReShade ended up building an addon system: you have to be able to inject shaders, create textures, and monitor the backbuffer to do post-processing. Instead of only doing it at the end of the frame, the addon system lets us listen for render events and act accordingly. That’s the basis for most of our mods. Every game uses DX/GL/VK commands, so it’s much easier to tap into those than into compiled CPU code.
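To show the difference between “post-process at the end” and “listen for render events”, here’s a tiny event-host sketch. The event names, payload shapes, the `AddonHost` class, and the shader hash are all made up for illustration; this is not ReShade’s actual addon API, just the general callback idea.

```typescript
// Hypothetical event payloads; the event names are illustrative only.
interface EventPayloads {
  draw: { shaderHash: number; shader: string };
  present: { frame: number };
}

type EventName = keyof EventPayloads;
// A handler returns true if it modified the payload.
type Handler<E extends EventName> = (payload: EventPayloads[E]) => boolean;

class AddonHost {
  private handlers: { [K in EventName]: Handler<K>[] } = {
    draw: [],
    present: [],
  };

  // Mods subscribe only to the events they care about.
  on<E extends EventName>(event: E, handler: Handler<E>): void {
    this.handlers[event].push(handler);
  }

  // The API hook calls this at the matching point in the frame and
  // learns whether any mod changed the call's parameters.
  dispatch<E extends EventName>(event: E, payload: EventPayloads[E]): boolean {
    let modified = false;
    for (const handler of this.handlers[event]) {
      if (handler(payload)) modified = true;
    }
    return modified;
  }
}

// Usage: a mod that swaps in a replacement shader for one known hash.
const host = new AddonHost();
const TARGET_HASH = 0x1234abcd; // made-up hash for illustration

host.on("draw", (call) => {
  if (call.shaderHash !== TARGET_HASH) return false;
  call.shader = "replacement_tonemap_shader"; // placeholder name
  return true;
});

const drawCall = { shaderHash: TARGET_HASH, shader: "original_shader" };
const changed = host.dispatch("draw", drawCall);
console.log(changed, drawCall.shader);
```

The point of the design is that the mod never touches the game’s compiled code; it only reacts to graphics API calls that every game has to make anyway.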

I wrote the RenoDX mod, if you’re talking about that. I don’t think there’s anything like ReShade’s addon system for Linux games. We’ve done OpenGL and Vulkan mods, but those still rely on intercepting the Windows implementations. Silksong primarily needs a 16-bit float render to solve most of its banding, but I’m not sure how you’d do the same on Linux.

We intentionally avoid per-game executable patching, but it sounds like that would be the best choice here. Getting the render to 16-bit would solve most of the banding, but you’d still need to replace shaders if your goal were HDR (or fake it as a post-process with something like vkBasalt).

We would have also accepted a bluer yellow.

The point is to show it’s uncapped, since SDR only goes up to 200 nits. It’s not tonemapped in the image.

But, please, continue to argue in bad faith and complete ignorance.

From what I understand, my old Game Boy had 4 AA batteries in alternating orientation, which gave 6 V (1.5 V per battery). Chaining positive to negative (in series) increases the voltage.

Since this one has them both pointing up, it’s just 1.5 V, as if you’d put in a half-sized battery.

Basically the same, just less capacity because of the smaller battery (compared to two of the same).

tl;dr: same, but half capacity.
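The rule behind this, sketched as arithmetic on idealized cells (no internal resistance, no discharge curve): in series, voltages add; in parallel, voltage stays the same and capacities add. The 2000 mAh AA figure is an assumed illustrative number, and `series`/`parallel` are hypothetical helper names.

```typescript
// Idealized cells: ignores internal resistance and discharge curves.
interface Cell {
  volts: number;
  mAh: number;
}

// Series (positive to negative): voltages add; usable capacity is
// limited by the weakest cell.
function series(cells: Cell[]): Cell {
  return {
    volts: cells.reduce((sum, c) => sum + c.volts, 0),
    mAh: Math.min(...cells.map((c) => c.mAh)),
  };
}

// Parallel (same orientation, terminals joined): voltage stays the same
// and capacities add. Assumes all cells share one voltage.
function parallel(cells: Cell[]): Cell {
  if (cells.length === 0) throw new RangeError("need at least one cell");
  return {
    volts: cells[0].volts,
    mAh: cells.reduce((sum, c) => sum + c.mAh, 0),
  };
}

// 2000 mAh is an assumed, illustrative AA capacity.
const aa: Cell = { volts: 1.5, mAh: 2000 };

console.log(series([aa, aa, aa, aa])); // { volts: 6, mAh: 2000 }
console.log(parallel([aa, aa]));       // { volts: 1.5, mAh: 4000 }
console.log(parallel([aa]));           // { volts: 1.5, mAh: 2000 }
```

The last two lines are the tl;dr: one cell gives the same 1.5 V as two in parallel, with half the capacity.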

He should argue his grievances to some sort of tribunal presided over by one or several judges in which legal issues and claims are heard and determined: one specifically that specializes in mammalians of the marsupial sort.

This is a trash take.

I just wrote the ability to take a DX9 game, stealthily convert it to DX9Ex, remap all the incompatible commands so it works, proxy the swapchain texture, set up a shared handle for that proxy texture, create a DX11 swapchain, read that proxy into DX11, and output it in true, native HDR.

All with the assistance of Copilot Chat to help make sense of the documentation, and Copilot generation and autocomplete to help set up the code.

All in one day.

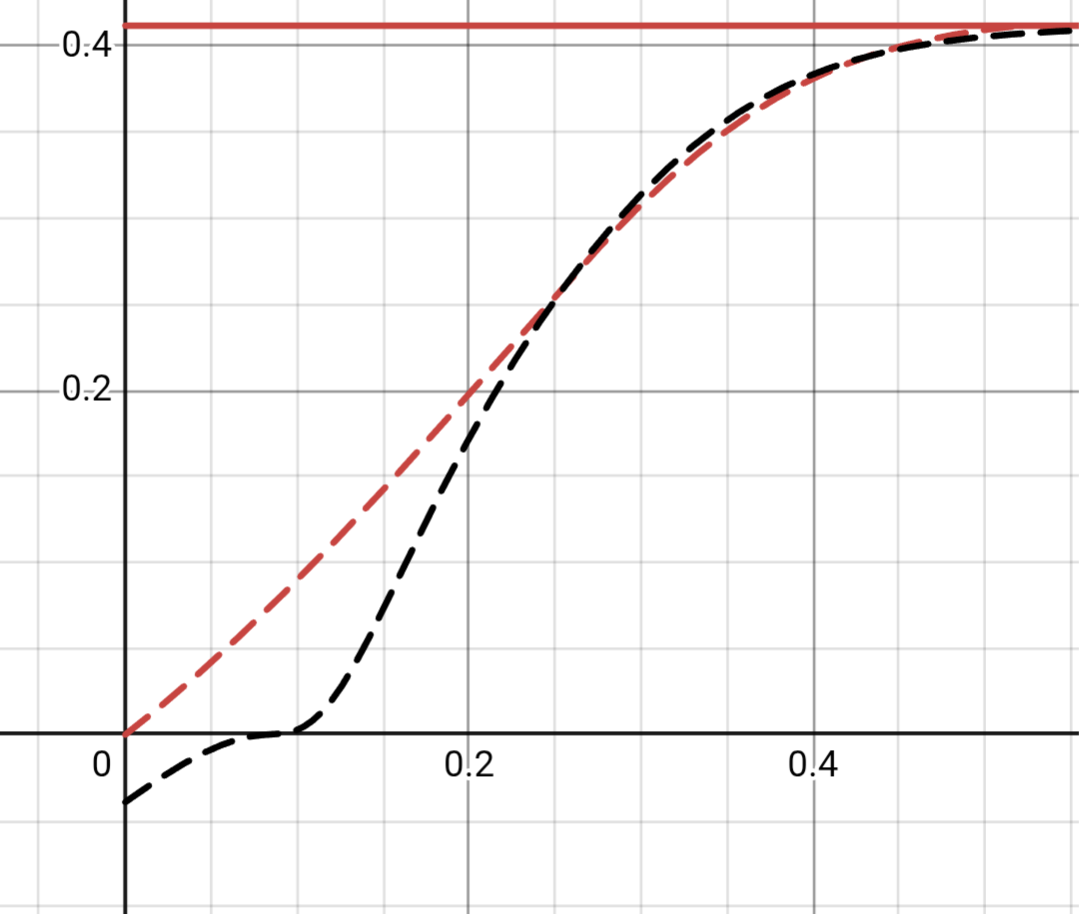

The Hejl Dawson tonemapper is a filmic tonemapper built by EA years ago. It’s very contrasty, similar to ACES (which Unreal mimics in SDR and uses for HDR).

The problem is, it completely crushes black detail.

https://www.desmos.com/calculator/nrxjolb4fc

Here it is compared to the other common tonemapper, Uncharted 2:

Everything under 0 is crushed.

To note, it’s exclusively an SDR tonemapper.
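For reference, here are both curves as they’re commonly published (the Hejl/Burgess-Dawson version is the one popularized in John Hable’s filmic tonemapping write-ups, alongside his Uncharted 2 curve). This is a comparison sketch, not code extracted from Echoes of Wisdom or Sleeping Dogs. It shows both properties: the Hejl curve subtracts 0.004 and clamps, so every linear input at or below 0.004 comes out pure black, and its output approaches but never exceeds 1.0, leaving no headroom for HDR.

```typescript
// Hejl / Burgess-Dawson filmic curve in its commonly published form.
// Its output already includes an approximate 2.2 display gamma.
function hejlDawson(linear: number): number {
  const x = Math.max(0, linear - 0.004); // everything <= 0.004 becomes 0
  return (x * (6.2 * x + 0.5)) / (x * (6.2 * x + 1.7) + 0.06);
}

// Uncharted 2 (Hable) curve with its usual published constants.
// Output is linear; inputs above WHITE / exposureBias exceed 1.0 and are
// normally clamped before display encoding.
const A = 0.15, B = 0.5, C = 0.1, D = 0.2, E = 0.02, F = 0.3;
const WHITE = 11.2; // linear white point

function hableCurve(x: number): number {
  return (x * (A * x + C * B) + D * E) / (x * (A * x + B) + D * F) - E / F;
}

function uncharted2(linear: number, exposureBias = 2.0): number {
  return hableCurve(exposureBias * linear) / hableCurve(WHITE);
}

// Compare in the same (linear) space by undoing Hejl's baked-in gamma.
for (const input of [0.001, 0.004, 0.01, 0.1, 1, 10, 100]) {
  const hejlLinear = Math.pow(hejlDawson(input), 2.2);
  console.log(input, hejlLinear.toFixed(5), uncharted2(input).toFixed(5));
}
```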

I’ve found this tonemapper in Sleeping Dogs as well, and when modding that game for HDR it was very noticeable how much it crushed. Nintendo would need to change the tonemapper to an HDR one or, as I think they’ll do, fake HDR by just scaling up the SDR image.

To note, I’ve replaced the tonemapper in Echoes of Wisdom with a custom HDR tonemapper via Ryujinx and it’s entirely something Nintendo can do. I just doubt they will.

“If the answer is yes, you should be incredibly proud of yourself.” (My guess)

I decompiled Echoes of Wisdom. It uses the pretty horrible Hejl Dawson tonemapper. Pretty sure the HDR is going to be fake inverse tonemapping.
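To illustrate why inverse tonemapping this particular curve can’t bring shadow detail back, here’s a sketch of an analytic inverse of the commonly published Hejl/Burgess-Dawson formula. It demonstrates the general technique only; nothing confirms Nintendo would invert this exact curve. Because every input at or below 0.004 maps to 0, the inverse can only return one value for all of them.

```typescript
// Commonly published Hejl / Burgess-Dawson curve (output is display-encoded).
function hejlDawson(linear: number): number {
  const x = Math.max(0, linear - 0.004);
  return (x * (6.2 * x + 0.5)) / (x * (6.2 * x + 1.7) + 0.06);
}

// Analytic inverse for 0 <= y < 1. Rearranging y = N(x) / D(x) gives
//   6.2(y - 1)x^2 + (1.7y - 0.5)x + 0.06y = 0,
// whose non-negative root is taken before adding the 0.004 offset back.
function inverseHejlDawson(y: number): number {
  if (y < 0 || y >= 1) throw new RangeError("y must be in [0, 1)");
  const a = 6.2 * (y - 1); // always negative in this range
  const b = 1.7 * y - 0.5;
  const c = 0.06 * y;
  const x = (-b - Math.sqrt(b * b - 4 * a * c)) / (2 * a);
  return x + 0.004;
}

// Distinct dark inputs collapse to the same recovered value;
// brighter inputs round-trip.
for (const input of [0, 0.001, 0.003, 0.01, 0.5]) {
  const recovered = inverseHejlDawson(hejlDawson(input));
  console.log(input, "->", recovered);
}
```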

DidYouKnowGaming knows me so well they’ve started titling their videos: 3 hours of GameCube facts to fall asleep to.

LAN ports have thankfully been standard since the Switch OLED.

Had this exact thought. But number must go up. Hell, for the suits, addiction and dependence on AI just guarantees the ability to charge more.

(X) Doubt.

All that matters is loyalty. They may vote in a certain manner for the majority of cases, but what matters are the critical cases of interest to their overlords.

Sure, but what does ChatGPT say?

The only reason I haven’t modded HDR into this game is that it’s DX9 and a pain to mod. (I already did GTA V Enhanced, and GTA Trilogy Remastered since it’s UE.) If they make a new PC port, I’ll be able to complete the set.

Definitely not. NoJS is not better for accessibility. It’s worse.

You need to set the ARIA states via JS. Believe me, I’ve written an entire component library with this in mind. I thought NoJS would be better: an HTML and CSS core with JS added on afterward. Then for my second rewrite, I went JS-first, and it’s all-around better for accessibility. Without JS you’d be leaning on a slew of hacks that just make accessibility suffer. It’s neat to build those NoJS components, but you have to hijack checkboxes or radio buttons in ways they weren’t intended to work.
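As a small example of keeping ARIA state in JS, here’s a sketch of the disclosure (show/hide) pattern. `AriaTarget` and `Panel` are hypothetical structural types so the logic runs against real DOM elements or test doubles, and `#details-toggle` in the wiring comment is a made-up ID.

```typescript
// Structural types: a real HTMLButtonElement / HTMLElement satisfies them.
interface AriaTarget {
  getAttribute(name: string): string | null;
  setAttribute(name: string, value: string): void;
}

interface Panel {
  hidden: boolean;
}

// Keep aria-expanded on the button in sync with the panel's visibility,
// so assistive tech announces the real state.
function setExpanded(button: AriaTarget, panel: Panel, expanded: boolean): void {
  button.setAttribute("aria-expanded", String(expanded));
  panel.hidden = !expanded;
}

// Flip the current state and return the new one.
function toggleDisclosure(button: AriaTarget, panel: Panel): boolean {
  const next = button.getAttribute("aria-expanded") !== "true";
  setExpanded(button, panel, next);
  return next;
}

// Browser wiring (a native <button> already fires click on Enter and Space):
// const button = document.querySelector<HTMLButtonElement>("#details-toggle")!;
// const panel = document.getElementById(button.getAttribute("aria-controls")!)!;
// setExpanded(button, panel, false);
// button.addEventListener("click", () => toggleDisclosure(button, panel));
```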

The needs of those with disabilities far outweigh the needs of those who want a no script environment.

With WAI-ARIA, on the other hand, you can quickly assert that the page is compliant with a checker before pushing it live.

Also no. You cannot check accessibility with HTML tags alone. Full stop. You need to check the ARIA attributes manually. You need to ensure states are updated. You need to add custom JS to handle key events so your components work as described in the ARIA Authoring Practices Guide. Relying on native components is not enough. They get you part of the way, but you’ll also run into incomplete native components that don’t work as expected (e.g., Safari doesn’t handle touch events the same way Chrome and Firefox do).
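For the key-event part, here’s a sketch of the keyboard logic for a horizontal tab list, following my reading of the ARIA Authoring Practices tabs pattern (Left/Right arrows wrap, Home/End jump, roving `tabindex`). It’s kept DOM-free so it can be unit-tested; `nextTabIndex` and `tabAttributes` are hypothetical helper names.

```typescript
// Map a KeyboardEvent.key value to the index of the tab that should get
// focus, or null if this widget doesn't handle the key.
function nextTabIndex(key: string, current: number, count: number): number | null {
  if (count <= 0) return null;
  switch (key) {
    case "ArrowRight":
      return (current + 1) % count; // wraps from last to first
    case "ArrowLeft":
      return (current - 1 + count) % count; // wraps from first to last
    case "Home":
      return 0;
    case "End":
      return count - 1;
    default:
      return null;
  }
}

interface TabAttrs {
  "aria-selected": "true" | "false";
  tabindex: "0" | "-1";
}

// Roving tabindex: only the selected tab is reachable with Tab; the rest
// are reached with the arrow keys. Selection follows focus here (the
// "automatic activation" variant of the pattern).
function tabAttributes(selected: number, count: number): TabAttrs[] {
  const attrs: TabAttrs[] = [];
  for (let i = 0; i < count; i++) {
    const isSelected = i === selected;
    attrs.push({
      "aria-selected": isSelected ? "true" : "false",
      tabindex: isSelected ? "0" : "-1",
    });
  }
  return attrs;
}

// Browser wiring sketch: on keydown, compute the next index; if it isn't
// null, call event.preventDefault(), apply tabAttributes(...) to each
// role="tab" element with setAttribute, then focus() the new tab.
```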

The sad thing is that accessibility testing is still rather poor. Chrome has the best way to automate testing against the accessibility tree, but it’s still hit or miss at times. It’s worse with Firefox and Safari. You need to double-check with manual testing that the ARIA states are reported correctly. Even with attributes set correctly, there’s no guarantee browsers will handle them properly.

I have a list of browser bugs that still aren’t fixed, but at least I’ve written workarounds for them, and those workarounds require JS to work as expected and provide proper accessibility.

The good news is that we were able to stop the Playwright devs from adopting this poor approach of relying on HTML alone for ARIA testing, and you can now take accessibility tree snapshots based on real-time JS values.

If you’re talking browsers, it’s poor. But HDR on displays is very much figured out and has none of the randomness you get with SDR from user-varied gamma, colorspace, and brightness. (That doesn’t stop manufacturers from borking things with Vivid Mode, though.)

You can pack HDR into JPG/PNG/WebP or anything that supports an ICC profile, and Chrome will display it. The formats that actually support HDR directly are PNG (with a cICP chunk), AVIF, and JPEG XL.
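To make the PNG + cICP option concrete, here’s a sketch that builds the chunk bytes. The code points are from ITU-T H.273: 9 = BT.2020 primaries, 16 = PQ transfer (HLG would be 18), 0 = identity/RGB matrix (the only value PNG allows), and 1 = full range. The helper names are hypothetical, and splicing the chunk into a real file (as I understand it, after IHDR and before PLTE/IDAT) is left out.

```typescript
// CRC-32 as used by PNG chunks (reflected polynomial 0xEDB88320).
function crc32(bytes: Uint8Array): number {
  let crc = 0xffffffff;
  for (let i = 0; i < bytes.length; i++) {
    crc ^= bytes[i];
    for (let k = 0; k < 8; k++) {
      crc = crc & 1 ? (crc >>> 1) ^ 0xedb88320 : crc >>> 1;
    }
  }
  return (crc ^ 0xffffffff) >>> 0;
}

// A PNG chunk is: 4-byte big-endian data length, 4-byte ASCII type,
// the data, then a CRC-32 over type + data.
function pngChunk(type: string, data: Uint8Array): Uint8Array {
  const out = new Uint8Array(12 + data.length);
  const view = new DataView(out.buffer);
  view.setUint32(0, data.length); // DataView writes big-endian by default
  for (let i = 0; i < 4; i++) out[4 + i] = type.charCodeAt(i);
  out.set(data, 8);
  view.setUint32(8 + data.length, crc32(out.subarray(4, 8 + data.length)));
  return out;
}

// cICP payload for BT.2100 PQ: primaries, transfer, matrix, full-range flag.
function cicpPQ(): Uint8Array {
  return pngChunk("cICP", new Uint8Array([9, 16, 0, 1]));
}

console.log(cicpPQ());
```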

Your best bet is to use avifenc to convert your HDR file. But note that servers may take your image and break it when rescaling.

Best single source for this info is probably: https://gregbenzphotography.com/hdr/