- cross-posted to:

- KasaneTeto@ani.social

We’re all Teto here?

AI moment.

woaaaaaaaa deepcut chain of thought reference - no wayyyy–!!!

i - cannot - believe it ----- next up yall start talkin bout the lonely left-out mixture of experts expert whos never used for anything >o< woaaaaa thatd be crazy–

or - or- or- or- about how supersparse MoEs appear much better in inference performance and training procedures - just like the brain—

or or or orrrrr. ----orororor----- - - - - or how like - laying out specific reasoning traces as text determines surprisingly accurately how any given model reaches a conclusion, again, very similar to how humans at higher ages have a harder time learning new stuff, as they appear to have more rigid reasoning patterns which tend to excel only in very narrow domains -

orrrrrrrrrrrrrrrrorrrororrroroooorrrrorororrr or or or o r or rooroo ororororor or or or or or or maybe about how pretraining data appears to define a models reasoning ability and quality much more than the actual reasoning post-training does----- similar to how some peeps have a much more difficult time acquiring knowledge than others do, as they may simply have specialized their mind into a different subset of abilities-

orrrrrrrrrrrrr how training a model with inherent knowledge and abilities is muuuuuuch more expensive than actually running it is - just like how evolving any species from the very beginning towards their current state took millions or trillions or iduno how many failed attempts to now have some human which struggles with their new environments - (somethin we would call a distributional shift in model training, but noooooo thats a totally different thing cuz humns totally diffrt)

orrorror how like - the number of parameters to actual performance equation is not linear, but logarithmic, implying that scaling a small model up to a larger size returns much higher boosts to intelligence than scaling an already large model by the same amount does - maybe implying, that also in real life, there is an optimal brain size past which diminishing returns yield no substantial gain over its previous smaller iteration???

thatd be crazy!! :ooooooooo
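
(a tiny toy sketch of the parameters-vs-performance point above, in case it helps. everything here is an assumption for illustration: `toy_score`, its `slope`, and the log10 shape are made up, not a fitted scaling law from any real model)

```python
import math

# Toy assumption: score grows with log10 of the parameter count.
# `slope` is an arbitrary illustrative constant, not a measured value.
def toy_score(params: float, slope: float = 10.0) -> float:
    return slope * math.log10(params)

def gain_per_billion(small: float, big: float) -> float:
    """Score gained per extra billion parameters when growing small -> big."""
    return (toy_score(big) - toy_score(small)) / ((big - small) / 1e9)

# Each 10x jump adds the same total score, but costs 10x more parameters
# than the previous jump, so the gain per added parameter keeps shrinking.
for small in (1e9, 1e10, 1e11):
    big = small * 10
    total = toy_score(big) - toy_score(small)
    print(f"{small:.0e} -> {big:.0e}: +{total:.1f} total, "
          f"{gain_per_billion(small, big):.4f} per extra 1B params")
```

under this toy curve every 10x jump buys the same total bump, so each extra parameter is worth less the bigger the model already is.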

I have absolutely no idea if this is defending AI or not.

u actually got it exactly right, its neither <3 <3

i like you, youre funny and smort

pls dont,… say smort.,.,. anyway-

thank u, i try my best-… but i also kinda try not being the LM peep on lemmy,… not very good at that tho-.,.

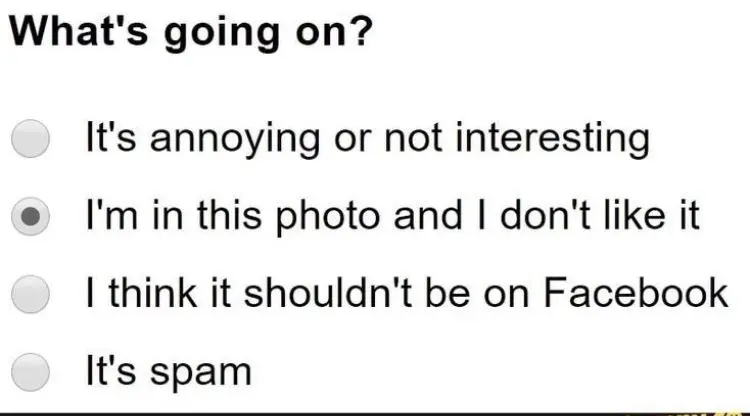

yeah, claude seems to do that too, it’s annoying