Want to wade into the spooky surf of the abyss? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this. Happy Halloween, everyone!)

KDE showing how it should be done:

https://mail.kde.org/pipermail/kde-www/2025-October/009275.html

Question:

I am curious why you do not have a link to your X social media on your website. I know you are just forwarding posts to X from your Mastodon server. However, I’m afraid that if you pushed for more marketing on X—like DHH and Ladybird do—the hype would be much greater. I think you need a separate social media manager for the X platform.

Response:

We stopped posting on X for several reasons:

- The owner is a nazi

- The owner censors non-nazis and promotes nazis and their messages

- (Hence) most people who remain on X or are clueless and have difficulty parsing written text (one would assume), or are nazis

- Most of the new followers we were getting were nazi-propaganda spewing bots (7 out of 10 on average) or just straight up nazis.

Our community is not made up of nazis and many of our friendly contributors would be the target of nazi harassment, so we were not sure what we were doing there and stopped posting and left.

We are happy with that decision and have no intention of reversing it.

The follow-up’s worth mentioning too:

It’s interesting they’re citing specifically DHH and Ladybird as examples to follow, considering:

https://drewdevault.com/2025/09/24/2025-09-24-Cloudflare-and-fascists.html

common KDE W

Think some of the KDE people are old school punkers so might not be a big shock.

after fedora announced that ai contributions are cool, this is really refreshing

back in ~my~ day cartel oligarchs would meet in secret to fix prices for products you cannot live without, then get a ton of profit and swim in money, while backstabbing one another at any opening with blackmail and assassins and whatnot. sometimes they’d fund a library or something to pretend they were philanthropists.

cartels these days make pretend products that nobody wants, then promise they’re going to “invest” one quadrillion dollars on the other oligarch’s company to create more virtual husbandos, and the other company in turn promises they’re going to buy one quadrillion dollars of “compute” from the first company, so that both can report one quadrillion dollars of “growth” for doing absolutely nothing. like who are they even trying to impress here. then the oligarch hires people to pretend he can play Diablo. what happened to honest, salt-of-the-earth exploitation of the masses, huh. the boot stomping on my face is all cheap plastic nowadays. they gotta replace it every 3 years and the new model doesn’t even fit my face anymore. they don’t make cartels like they used to

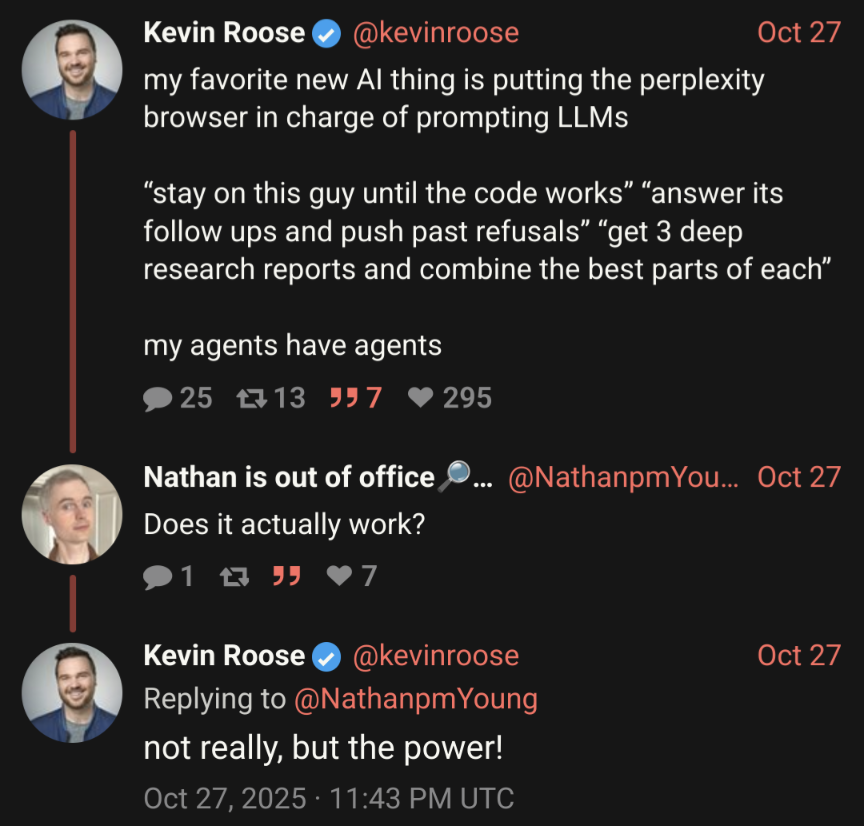

That’s like connecting a baking oven to a fridge and then marveling at the power of all the heat exchange

AI was capitalism all along etc etc

Moar like power the butt.

Ugh. Hank Green just posted a 1-hour interview with Nate Soares about That Book. I’m halfway through on 2x speed and so far zero skepticism of That Book’s ridiculous premises. I know it’s not his field but I still expected a bit more from Hank.

A YouTube comment says it better than I could:

Yudkowsky and his ilk are cranks.

I can understand being concerned about the problems with the technology that exist now, but hyper-fixating on an unfalsifiable existential threat is stupid as it often obfuscates from the real problems that exist and are harming people now.

it often obfuscates from the real problems that exist and are harming people now.

I am firmly on the side of it’s possible to pay attention to more than one problem at a time, but the AI doomers are in fact actively downplaying stuff like climate change and even nuclear war, so them trying to suck all the oxygen out of the room is a legitimate problem.

Yudkowsky and his ilk are cranks.

That Yud is the Neil Breen of AI is the best thing ever written about rationalism in a youtube comment.

“I can read HTML but not CSS” - Eliezer Yudkowsky, 2021 (and since apparently scrubbed from the Internet, to live only in the sneers of fond memory)

It’s giving japanese mennonite reactionary coding

there is now a video on SciShow about it too.

This perception of AI as a competent agent that is inching ever closer to godhood is honestly gaining way too much traction for my tastes. There’s a guy in the comments of Hank’s first video, I checked his channel and he has a video “We Are Not Ready for Superintelligence” and it got a whopping 8 million views! There’s another channel I follow for sneers and their video on Scott’s AI 2027 paper has 3.7 million views and a video about AI “attempted murder” has 8.5 million. Damn.

I wonder when the market finally realises that AI is not actually smart and is not bringing any profits, and subsequently the bubble bursts, will it change this perception and in what direction? I would wager that crashing the US economy will give a big incentive to change it but will it be enough?

I could also see the response to the bubble bursting being something like “At least the economy crashing delayed the murderous superintelligence.”

I’m betting on a new version of the “stabbed in the back” myth. Fash love that one.

@o7___o7 @ShakingMyHead it’s a cult: it can never fail, it can only *be* failed

“We would have been immortal God-Kings if not for you meddling (woke) kids!”

I wonder when the market finally realises that AI is not actually smart and is not bringing any profits, and subsequently the bubble bursts, will it change this perception and in what direction? I would wager that crashing the US economy will give a big incentive to change it but will it be enough?

Once the bubble bursts, I expect artificial intelligence as a concept will suffer a swift death, with the many harms and failures of this bubble (hallucinations, plagiarism, the slop-nami, etcetera) coming to be viewed as the ultimate proof that computers are incapable of humanlike intelligence (let alone Superintelligence™). There will likely be a contingent of true believers even after the bubble’s burst, but the vast majority of people will respond to the question of “Can machines think?” with a resounding “no”.

AI’s usefulness to fascists (for propaganda, accountability sinks, misinformation, etcetera) and the actions of CEOs and AI supporters involved in the bubble (defending open theft, mocking their victims, cultural vandalism, denigrating human work, etcetera) will also pound a good few nails into AI’s coffin, by giving the public plenty of reason to treat any use of AI as a major red flag.

I made it 30 minutes into this video before closing it.

What I like about Hank is that he usually reacts to community feedback and is willing to change his mind when confronted with new perspectives, so my hope is that enough people will tell him that Yud and friends are cranks and he’ll do an update.

I dunno about that, recent knitting drama took a while to clear up, and I’m not sure if AI sceptics are as determined a crowd as pissed off knitters.

(Tl;dr on the drama: there was a video on SciShow about knitting that many (myself included) felt was not well researched, misrepresented the craft, and had a misogynistic vibe. It took a lot of pressure from the knitting community to get, in order, a bad “apology”, a better apology, and the video taken down.)

Employee at ‘plagiarism company’ defending transition to ‘plagiarism + pushing sex content onto children company’ insists that the reason they are pushing smut slop onto kids is due to their passion for creativity.

S-tier big brain ai safety researcher chimes in:

Masterful gambit, sir. Why didn’t we consider the fact that “automating all labour would produce more revenue”?

I realize it’s been poisoned since/by coiners, but god I hate that usage of “democratize”

Just pretend that it’s coming from a different root word “mocratize” meaning the opposite of whatever the fuck crypto is doing

could I, like, not do that? would suit my needs better. kthx

thanks for asking.

No of course

it’s democratic if i couldn’t do it yesterday but i can do it today, even though it’s not the same in any meaningful way

edit: in all seriousness, it’s disgusting the way they are pretending there is some noble intention behind any of this.

yeah it’s very much an intentional usage of poisoned language to construct a targeted outcome

but arrrrrrgh

As usual the libertarians are saying “democratize” when they mean “commodify”.

What professional athlete is a) working for OpenAI and b) wants to turn Sora into the bottomless fountain of goon?

A horny one.

And just as importantly, free-sprinted

Oh God my brain is so used to turning typos into likely intended words that I missed “free-sprinted”, which I’m going to guess in this context involves being athletic and horny and bottomless and possibly suffering from protein-powder-induced lead poisoning.

That might explain why copilot is a cum sprite

horny and bottomless

truly a hellish predicament

Lightly concealing his identity behind a generated anime avatar may be the wisest thing that kid ever did

The kid arguing for deepfake porn ofc sees no problem with Ghiblifying himself with his plagiarism machine. Total fucking douchebag status.

“How dare you suggest that we pivoted to SlopTok and smut because of money if something that we totally cannot do right now is more lucrative?”

Really, Colin?

I might be behind the curve on this one, but ice are now using halo (the computer game) images in recruitment ads, and referring to immigrants (and people who look like immigrants, i guess) as “the flood”, the all-consuming alien horde who are one of the antagonists of the series.

Given how microsoft are happy to contribute to the development of the epstein ballroom, I can only assume that they’re cool with all this.

https://aftermath.site/microsoft-halo-dhs-ice-trump-flood

alt text

A screenshot of a twitter post by the department of homeland security, showing an image from the halo video game series and the text “finishing this fight”, “destroy the flood” and a link to “join ice gov”.

Unfortunately, the music director Marty O’Donnell (iirc?) for og halo games is a big trumper and ran for office. And he made absolute banger sound tracks too!

https://www.gamefile.news/p/halo-ice-developers-react

Dev team leads at least denouncing it as the disgusting shit it is.

That’s depressing… I really liked the music direction of halo. It really stood out to me in a way that other games never manage. I can still hum the halo theme and a bunch of its score, but I’d be hard pressed to do that with any other game… I know the elder scrolls theme, I guess, but can’t remember much else about their sound design.

if you enjoy older elder scrolls music, don’t ask too many questions about jeremy soule either.

Not so much “enjoy” as “remember at all, unlike most of the other games I’ve played in the last 10 years or so”, but I take your point.

at least the dude who writes most music for elder scrolls online seems to be decent enough.

Well, as far as I can tell, we still have Nile Rodgers.

bet they wouldn’t take kindly to Wolfenstein spamming in return

NB: a few cocktails in. Don’t really have a point here. Everything sucks, including this.

Halo: CE was written in the late 90s in the US, so it’s pretty clear that it exists as a metaphor for conflict in the Middle East. It’s initially humans (really space 'muricans) vs. the covenant (an ancient, religious empire with many references to abrahamic religion). The MC is a genetically modified supersoldier. Most shooters are fascistic military propaganda, intentional or no.

Bungie made Marathon before Halo and it’s basically the same plot - supersoldier aided/hindered by AI/s fights an alien force consisting of many “integrated” species. It’s a cheap way of making different enemies that are all antagonists.

OFC why the colony ship Marathon needed a supersoldier on tap is never explained. After a while our protag gets involved in a rebellion against the Pfhor’s leaders and then we get Infinity which is just weird. Oh and there’s an eldritch horror living in a star too.

Hey now, it’s also a clearly copy and pasted plagiarism of James Cameron’s Aliens!

I got more “the thing” vibes, tbh.

Master Chief, you mind telling me what you are doing on that ICE propaganda?

Performing the SPARTAN Program’s original aim, sir.

hmmm, gotta name my future scifi franchise’s augmented monastic space marin-time infantry supersoldiers THEBANs

(yes homo)

Yeah silence is being complicit in this case.

Trump also posted an image of him in the masterchief suit. Without a helmet. Halo is not my thing but I think that is a thing which is not done, like with judge dredd, the helmet stays on.

Apologies for doing journal club instead of sneer club.

Voiseux, G., Tao Zhou, R., & Huang, H.-C. (Brad). (2025). Accepting the unacceptable in the AI era: When & how AI recommendations drive unethical decisions in organizations. Behavioral Science & Policy, 0(0). https://doi.org/10.1177/23794607251384574

abstract:

In today’s workplaces, the promise of AI recommendations must be balanced against possible risks. We conducted an experiment to better understand when and how ethical concerns could arise. In total, 379 managers made either one or multiple organizational decisions with input from a human or AI source. We found that, when making multiple, simultaneous decisions, managers who received AI recommendations were more likely to exhibit lowered moral awareness, meaning reduced recognition of a situation’s moral or ethical implications, compared with those receiving human guidance. This tendency did not occur when making a single decision. In supplemental experiments, we found that receiving AI recommendations on multiple decisions increased the likelihood of making a less ethical choice. These findings highlight the importance of developing organizational policies that mitigate ethical risks posed by using AI in decision-making. Such policies could, for example, nudge employees toward recalling ethical guidelines or reduce the volume of decisions that are made simultaneously.

so is the moral decline a side effect, or technocapitalism working as designed.

so is the moral decline a side effect, or technocapitalism working as designed.

AI is an accountability sink by design, it’s technocapitalism working as designed

as a bonus, it’s also fascist!

I would adore an awful journal club tbh

The computer-science section of the arXiv has declared that they can’t put up with all your shit any more.

arXiv’s computer science (CS) category has updated its moderation practice with respect to review (or survey) articles and position papers. Before being considered for submission to arXiv’s CS category, review articles and position papers must now be accepted at a journal or a conference and complete successful peer review. When submitting review articles or position papers, authors must include documentation of successful peer review to receive full consideration. Review/survey articles or position papers submitted to arXiv without this documentation will be likely to be rejected and not appear on arXiv.

from the folks who brought you

we’ve trained a model to regurgitate 19th century pseudoscience

the field of computer science presents: How to destroy a public good by skipping all the required reading in your liberal arts courses

Grokipedia just dropped: https://grokipedia.com/

It’s a bunch of LLM slop that someone encouraged to be right wing with varying degrees of success. I won’t copy paste any slop here, but to give you an idea:

- Grokipedia’s article on Wikipedia uses the word “ideological” or “ideologically” 23 times (compared with Wikipedia using it twice in its Wikipedia article).

- Any articles about transgender topics tend to mix in lots of anti-transgender misinformation / slant, and use phrases like “rapid-onset gender dysphoria” or “biological males”. The last paragraph of the article “The Wachowskis” is downright unhinged.

- The articles tend to be long and meandering. I doubt even Grokipedia proponents will ultimately get much enjoyment out of it.

Also certain articles have this at the bottom:

The content is adapted from Wikipedia, licensed under Creative Commons Attribution-ShareAlike 4.0 License.

Decided to check the Grokipedia “article” on the Muskrat out of morbid curiosity.

I haven’t seen anything this fawning since that one YouTube video which called him, and I quote its title directly, “The guy who is saving the world”.

Interesting that for the musk article, it has the “see edits” button disabled. ha

E:

I peeked under the hood, “see edits” data is in page.fixedIssues on the api, ripe for scraping: https://grokipedia.com/api/page?slug=StarCraft_II&includeContent=false
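For anyone who wants to poke at it, here is a rough sketch of pulling that field out. The URL and the `page.fixedIssues` path come from the link above; everything else (the function names, the assumption that the response is a JSON object with a top-level `page` key, the fallback to an empty list) is my guess and not a documented API.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Endpoint as seen in the link above; not an official or documented API.
API_BASE = "https://grokipedia.com/api/page"


def build_url(slug: str) -> str:
    """Build the page URL for a slug, skipping the article body."""
    query = urlencode({"slug": slug, "includeContent": "false"})
    return f"{API_BASE}?{query}"


def extract_fixed_issues(payload: dict) -> list:
    """Return page.fixedIssues from a decoded response, or [] if absent.

    Assumes the response looks like {"page": {"fixedIssues": [...]}}.
    """
    page = payload.get("page") or {}
    return page.get("fixedIssues") or []


def fetch_fixed_issues(slug: str) -> list:
    """Hypothetical helper: fetch one page and return its 'see edits' data."""
    with urlopen(build_url(slug), timeout=10) as resp:
        return extract_fixed_issues(json.load(resp))
```

Calling `fetch_fixed_issues("StarCraft_II")` would hit the same URL as the link; whether the endpoint stays open or keeps this shape is anyone's guess.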

To start this spooky Stubsack off, there’s signs Framework are being slow on the refunds:

Just a heads up I haven’t gotten a refund from my cancelled FW12 order. Framework seems to be having trouble figuring it out.

I don’t know why, maybe it is Canada or maybe it is a high volume of similar requests, but it is a sign I always find concerning in a company I am worried about the financial stability of.

Could be nothing, but if you have been wavering on a cancellation I figured you might want a heads up.

This comes two weeks after Framework’s public fash turn, and just a few days after their latest double down. “Go fash, lose cash” proves itself again.

i’m trying to sell mine now

but also i don’t have any other computers and probably can’t afford anything

time for me to learn to use a pencil

The market should be flooded with used business laptops that can’t be upgraded to Windows 11 but will take an easy Linux distro

oh fuck, i didn’t think of that! thank you for the idea 💖

lightly used thinkpads are the classic choice for this — IT departments buy high spec ones then dump them for cheap a few years later in surplus sales or on eBay, and there are usually repair manuals and spare parts readily available. usually you can type the specific model and generation into a search and get a wiki page or at least a couple blog posts reporting how well they’re supported under linux, and Lenovo seems to intentionally do very well on compatibility since Linux compatibility is a nice checkbox for an enterprise laptop to have. just be careful you don’t get bamboozled into buying any of Lenovo’s consumer laptops, since they tend to be a fair bit cheaper and don’t have the same compatibility guarantees, repairability, or ample spare parts availability.

For sale: lenovo thinkpad, lightly used

-Earnest Hempingway

my laptop is a budget model from 2016 and it runs xfce smoothly and happily lol. i code on it and watch streams and play slay the spire and all the usual stuff. idk how the stylus changes things but the required specs for doing quite a lot with linux are negligible

thank you for the suggestion, this is good stuff.

Was on the lookout about a year ago, didn’t find anything promising enough back then, granted I only checked a few places. Granted, I did want an ok GPU.

And on the subject of microsoft, this is a splendid way to describe both that specific company, the us tech sector as a whole and the entire us government for that matter:

“We will build the tools of genocide, but never a sex bot” is such a condemnation of American society lolsob

https://xoxo.zone/@Ashedryden/115452105359019979

It was posted in reference to this article on the MIT technology review site, which gets an archive link because it has two overlapping cookie opt-out popups: https://archive.is/KhMqT

It is an interview with microsoft’s mustafa suleyman, their head of ai. For all he claims to think that chatbots pretending to be people is bad, I don’t see him actually doing a whole lot about it.

but never a sex bot

Not speaking for myself (because we were a gamecube household) but based on my internet travels, Cortana (from Halo, also in subject) was a sexual awakening for a lot of people. So maybe when he says “we” he only means the present cohort of microsofties.

Yeah, skintight palmtop hologram cortana certainly ticked some boxes there, but in-universe it was all a bit “everyone is beautiful, no-one is horny”, with a side order of “all assistants should be female and sexy”, to my mind at least.

two overlapping cookie opt-out popups:

Love when this happens and on your phone you cant even reach the buttons. The lost art of testing your websites.

The lost art of testing your websites.

i run with javascript disabled by default, and it’s actually refreshing when a website at least displays “this shit requires javascript lol” instead of just not working

the modern web sucks, let’s all train ravens like asoiaf

long hand of casey newton (by proxy) outputs a weird hit piece on ed zitron in wired https://archive.is/chsCw so far bluesky in shambles with no other effects

i have mixed feelings here. on the one hand, a lot of the article hinges on the suggestion that zitron is somehow concealing that he works with AI companies. i’ve listened to his podcast, i’ve read his articles, he is pretty up front about what his day job is and that he is a disappointed fanboy for tech. the dots are 1/1000th of an inch apart. it also devotes a remarkable amount of time to remarks from casey newton and the like, who have nothing to offer the world.

on the other hand, i do find it genuinely repulsive that he’ll work with a company like DoNotPay. while it might be hackwork to suggest he’s concealing it, I don’t like the association whether he’s open about it or not.

on the… third hand? when i’ve read his posts, i’ve found myself totally unable to evaluate his financial claims. the evidence always seems unimpeachable, i just do not know whether the conclusions he draws from that evidence make sense, so i never cite him. i think a more honest and interesting version of this article, one that went further than trying to insinuate he’s an ignorant fraud, would involve collaborating with someone with a lot of financial expertise and examining how rigorous his work actually is. but wired apparently wasn’t interested in trying to make that article happen

He, or someone, should work with Bethany McLean on checking Zitron’s work. She cowrote The Smartest Guys In The Room about Enron in 2003 and a book about the 2008 financial crisis. In 2001 she wrote about thinking something was hinky about Enron’s financial filings.

I think Zitron has posted that none of these companies is profitable. Midjourney claims to be making a profit since 2024 although that depends on not paying for the IP they use etc. etc. etc. (and private companies can claim all kinds of things about their balance sheets without the CEO going to jail if they are creative).

his conclusions are a lot more complex than “none of these companies is profitable”

none

When faced with a long complicated argument outside your competence, it’s a really useful heuristic to spot-check a few sections and assume that if they are wrong the whole structure is flawed. And at least as many readers will take away the soundbites like “none of these companies is profitable” and “pathetic revenues” as any nuanced version that is hidden in there. As critics of spicy autocomplete go he is really far on the “pundit” end of the “academic to pundit” scale (well past our David Gerard).

i see. i misunderstood your previous post, thought you meant that “none of these companies is profitable” is essentially his only conclusion and that you considered it justifiable enough

I think Zitron has some important analysis mixed up with the clickbait and the populist rhetoric. I thought he was trying to be a full-time blogger but now I see he runs a one-person PR business (!)

looks pretty good to me, I’d be delighted to produce this sort of work and he’s doing loadbearing work on the numbers here - that the finance press is faintly catching up to a year later.

It’s too bad that Patrick McKenzie sided with the promptfondlers because he was a useful ally in saying “we need more reporting on cryptocurrency by journalists who can read a balance sheet and do arithmetic”.

i’ve listened to his podcast, i’ve read his articles, he is pretty up front about what his day job is and that he is a disappointed fanboy for tech. the dots are 1/1000th of an inch apart.

For comparison I’ve only read Ed’s articles, not listened to his podcasts, and I was unaware of his PR business. This doesn’t make me think his criticisms are wrong, but it does make me concerned he’s overlooked critiquing and analyzing some aspects of the GenAI industry because of these connections to those aspects.

Zitron was a blogger now, doing enjoyable bloggy things like hanging rude epithets on CEOs and antagonizing the normie tech media. Kevin Roose and Casey Newton, the hosts of the New York Times’ relatively bullish Hard Fork podcast, quickly became prime targets. They’re too friendly with their subjects, says Zitron, who called Hard Fork a case study in journalists using “their power irresponsibly.” He recalls having pitched Newton once in his capacity as a flack, but nothing came of it. Newton, for his part, remembers meeting Zitron somewhere, maybe a decade ago, and Zitron saying something like, “I would really like to be friends.” Nothing came of that, either.

I will choose to read this as: newton mad that they arent pals with zitron

TBH I am neutral on zitron. I don’t read his stuff on the reg, just when it pops up here and I feel like it. We all belong to the same hypocrisy. If he’s pushed AI companies before through his PR firm, that sucks.

Baldur Bjarnason’s (indirectly) given his thoughts on the piece, treating its existence (and the subsequent fallout) as a cautionary tale on why journalistic practices exist and how conflicts of interest can come back to haunt you.

(In particular, Baldur notes that Zitron could’ve nipped this problem in the bud by firing his AI-related clients after he became the premier AI critic.)

Maybe more importantly, for his readers and listeners, Zitron holds out the seductive promise of some great comeuppance for the industry. Justice, of some kind, for an audience that isn’t seeing much of it in evidence anywhere. “I do not think this is a real industry,” he has written, “and I believe that if we pulled the plug on the venture capital aspect tomorrow it would evaporate.” When On the Media asked how he could be so certain that a collapse was coming, he replied, “I feel it in my soul.”

Yeah this cannot be bad journalism, it has to be intellectual dishonesty. Someone paid for a hit piece for sure.

Ah that explains why people were talking about Ed critics. When it reached my feed it had already devolved into other convos about Zitron haters.

(And yes he isnt flawless, but that just means we need more people in the anti AI space).

In my day people were ashamed of being mad in the newspaper.

wint @dril 29 Dec 2014 and another thing: im not mad. please dont put in the newspaper that i got mad.

WTF I was doing all this in EMACS in 2008.

As highly requested

who the fuck requests this shit, these people, their customers, their products and dcs could be swallowed by earth tomorrow with only upsides for everyone else

lord, this is so cursed, especially the gambling (though you could say all vibe coding is gambling, ha)

Almost gave this a reflexive down vote. Even for YC, this startup is tremendously awful.

Thank you for sharing!

wait this isn’t a joke this is a yc funded startup

It also integrates Stake into your IDE, so you can ruin yourself financially whilst ruining the company’s codebase with AI garbage

this is not web scale, you need crypto trading to scale gambling losses

It is sunday, so time to make some posts almost nobody will see. I generated a thing:

Image description

3 screenshots from a The Simpsons episode. Bart is sitting in his class and the whole class in the first panel says “Say the line” with eyes filled with expectation and glee, next panel a sad downlooking Bart says “AI is the future and we all need to get on board”, third panel everybody but Bart cheers.

If these plugs are too much, please let me know: the second episode of our podcast on historical bigoted/misogynist texts is out now. “Woman, thy name is russia.”

https://bsky.app/profile/odiumsymposium.bsky.social/post/3m4cjzttdek2f

do please continue!

(i mean, like i can talk)

Just subbed on podcast addict!